May 16, 2017

By: Michael Feldman

Amid all the fireworks around the Volta V100 processor at the GPU Technology Conference (GTC) last week, NVIDIA also devoted a good deal of time to its new cloud offering, the NVIDIA GPU Cloud (NGC). With NGC and its new Volta hardware, the company is now poised to play both ends of the cloud market: as a hardware provider and as a platform-as-a-service provider.

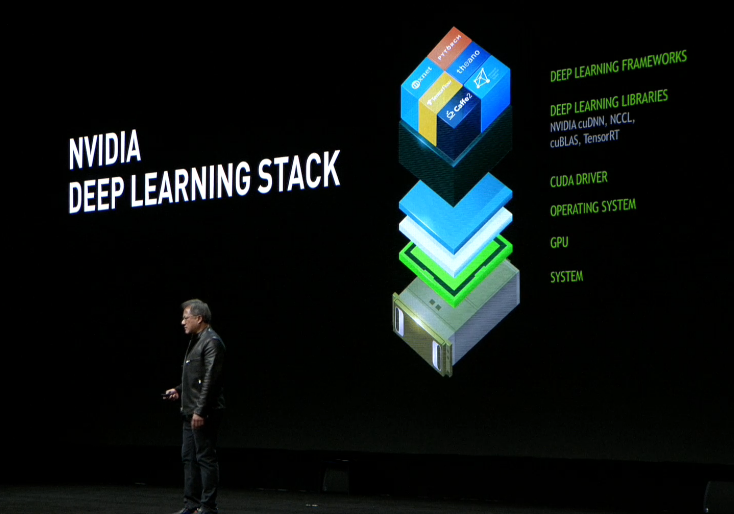

At the heart of NGC is a set of deep learning software stacks that can run atop NVIDIA GPUs – not just the new Tesla V100, but also the P100, or even the consumer-grade Titan Xp. The stack itself is composed of popular deep learning frameworks (Caffe, Microsoft Cognitive Toolkit, TensorFlow, Theano and Torch), NVIDIA's deep learning libraries (cuDNN, NCCL, cuBLAS, and TensorRT), the CUDA drivers, and the OS. The various stacks are containerized for different environments using NVDocker (a GPU-flavored wrapper for Docker) and then collected in a cloud registry.

Source: NVIDIA


The value proposition here is a broad choice of integrated stacks that can run deep learning applications in many different environments (as long as the hardware includes a good-sized Pascal or Volta NVIDIA GPU). For an application developer, composing a coherent stack from scratch can be a chore, given the variety of deep learning frameworks and their dependencies on libraries, drivers, and the operating system. Keeping up with the latest versions of all these software components – "arguably the most complex stack of software the world has ever seen," says NVIDIA CEO Jen-Hsun Huang – adds another daunting layer of complexity. NGC is meant to take all of this software fiddling off the developer's plate.

NGC allows you to run your deep learning application either locally, on your own PC or DGX system, or remotely in the cloud. In fact, a typical progression would be to run your application on an in-house machine and then burst it into the cloud when greater scale is needed. “This is really the world’s first hybrid deep learning cloud computing platform,” noted Huang.

Once you decide whether to run locally or remotely, you select the appropriate stack for the runtime environment, along with your deep learning application and your dataset. If you are running in the cloud, you will have a number of choices. A demonstration during Huang's GTC keynote showed a choice among NVIDIA's in-house DGX SATURNV supercomputer, Microsoft Azure GPU instances, and AWS GPU instances. It's not clear whether SATURNV will be generally available as a public resource, but the demo implies that it will. If so, NVIDIA would be able to charge users for both its cloud platform and the underlying infrastructure.

Beta testing on NGC will begin in July, with pricing to be determined at a future date.

NVIDIA will also use the new Volta V100 GPU to gain a bigger foothold in the cloud hyperscale space. At GTC, Amazon said it was already committed to adding the V100 to its cloud offerings as soon as NVIDIA starts cranking them out. "We'll make Volta available as the foundation for our next general-purpose GPU instance at launch," says Matt Wood, Amazon's General Manager for Deep Learning and AI.

Amazon has been a good customer of NVIDIA, using NVIDIA GPUs in its own learning efforts for things like Alexa and for product recommendations associated with its online store. But making that technology available to cloud users on AWS is now driving additional GPU uptake at Amazon. Apparently, the current GPU instances are among the fastest growing for AWS. "Our most recent instance, the P2, is just growing like wildfire," says Wood. According to him, it's being used extensively for deep learning across many verticals – everything from medical imaging to autonomous driving.

Likewise, Microsoft has used NVIDIA GPUs to drive its deep learning training on Azure for several years now. Jason Zander, Microsoft corporate VP for Azure, noted that GPUs form the basis for the natural language translation capability in Skype. "That's one of the most sophisticated language deep neural nets that's out there," says Zander. "It's really cool. I can talk to someone in English and they can hear it in Chinese. We can't do that without the power of the cloud and GPUs."

Microsoft is also likely to pick up the enhanced HGX-1 GPU expansion box for the cloud, which will soon be available with V100 GPUs. The HGX-1 was co-designed by Microsoft to offer a “hyperscale GPU accelerator chassis for AI.” The original HGX-1, announced in March, came with eight P100 GPUs, which can be expanded to a four-chassis system containing 32 GPUs. When such a system is built with the new V100s, that mini-cluster will deliver 3.8 petaflops of “deep learning” performance.
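The 3.8-petaflop figure is easier to interpret per GPU. As a rough check that uses only the numbers quoted above (8 GPUs per chassis, four chassis, 3.8 "deep learning" petaflops), the sketch below assumes the aggregate is split evenly across GPUs; the per-GPU result is derived here, not an NVIDIA-quoted spec:

```python
# Back-of-the-envelope check of the HGX-1 figures quoted in the article.
# Assumption: the aggregate petaflop number divides evenly across all GPUs.
GPUS_PER_CHASSIS = 8
MAX_CHASSIS = 4
SYSTEM_PFLOPS = 3.8  # "deep learning" petaflops for the 32-GPU V100 system


def per_gpu_tflops(system_pflops: float, gpus: int) -> float:
    """Split an aggregate petaflop figure evenly across GPUs, in teraflops."""
    return system_pflops * 1000 / gpus


total_gpus = GPUS_PER_CHASSIS * MAX_CHASSIS
print(total_gpus)  # 32
print(f"{per_gpu_tflops(SYSTEM_PFLOPS, total_gpus):.2f}")  # 118.75
```

In other words, the headline number works out to roughly 119 teraflops of "deep learning" performance per V100.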

Source: NVIDIA


Amazon and Microsoft, along with most other cloud providers and their users, are employing GPUs to train deep neural networks. But NVIDIA wants to build on that success with its 150-watt V100 offering. As we wrote last week, this low-power version offers 80 percent of the performance of the full 300-watt V100 part, and is aimed at the inferencing side of deep learning. That means NVIDIA is looking to sell these low-power V100s to the big cloud providers in hyperscale-sized allotments.
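Taking the quoted figures at face value, the low-power part trades absolute speed for efficiency. The sketch below normalizes performance to the full 300-watt V100 (1.0) and assumes both parts draw their rated power, a simplification since actual draw varies with workload:

```python
def perf_per_watt(relative_perf: float, watts: float) -> float:
    """Relative performance delivered per watt of rated power."""
    return relative_perf / watts


full_v100 = perf_per_watt(1.0, 300)       # baseline: full 300-watt V100
low_power_v100 = perf_per_watt(0.8, 150)  # 150-watt part at 80% of the performance

# Efficiency advantage of the low-power part over the full part
print(f"{low_power_v100 / full_v100:.2f}x")  # 1.60x
```

Under that assumption, halving the power while keeping 80 percent of the performance yields about 1.6 times the performance per watt, which is the figure that matters for densely packed inference servers.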

NVIDIA has targeted this area before, with its Maxwell M4 and M40 GPUs, and more recently with the Pascal P4 and P40 GPUs. But the new V100 offers much better performance and lower latency than any of its predecessors. NVIDIA has also upgraded its TensorRT library for Volta; it can now compile and optimize a trained neural network for ultra-fast inferencing using the V100's Tensor Cores.

Although 150 watts is a fairly high power draw for an accelerator aimed at commodity cloud servers, the rationale is that the V100 can deliver much more inferencing throughput per server than competing solutions, thus saving on overall datacenter costs. According to NVIDIA, just 33 nodes of P100-accelerated servers can inference 300 thousand images per second. They estimate that's about 1/15 as many servers as would be needed by CPU-only machines.
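NVIDIA's ratio implies a CPU-only cluster of roughly 500 servers for the same job. The arithmetic below uses only the figures quoted above (33 GPU-accelerated nodes, 300,000 images per second, and a roughly 15:1 server ratio) and assumes throughput is spread evenly across nodes:

```python
GPU_NODES = 33
IMAGES_PER_SEC = 300_000
CPU_TO_GPU_SERVER_RATIO = 15  # "about 1/15 as many servers"

# Implied size of an equivalent CPU-only cluster
cpu_servers = GPU_NODES * CPU_TO_GPU_SERVER_RATIO
print(cpu_servers)  # 495

# Implied per-server throughput, assuming an even split of the workload
print(round(IMAGES_PER_SEC / GPU_NODES))    # 9091 images/s per GPU node
print(round(IMAGES_PER_SEC / cpu_servers))  # 606 images/s per CPU server
```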

Inferencing, though, increasingly relies on more specialized hardware to maximize performance and minimize power usage. Microsoft, for example, is employing FPGAs for this task, while Google has turned to its own custom-built Tensor Processing Unit (TPU). Additional purpose-built solutions from the likes of Graphcore and Intel/Nervana are also in the works. Whether low-power V100s can compete in this environment remains to be seen, but at least for the time being, NVIDIA seems to be wagering that offering more powerful deep learning silicon, which can serve both training and inferencing, will win the day. And given the nearly insatiable demand for both these days, that could be a smart bet.