Aug. 9, 2018

By: Michael Feldman

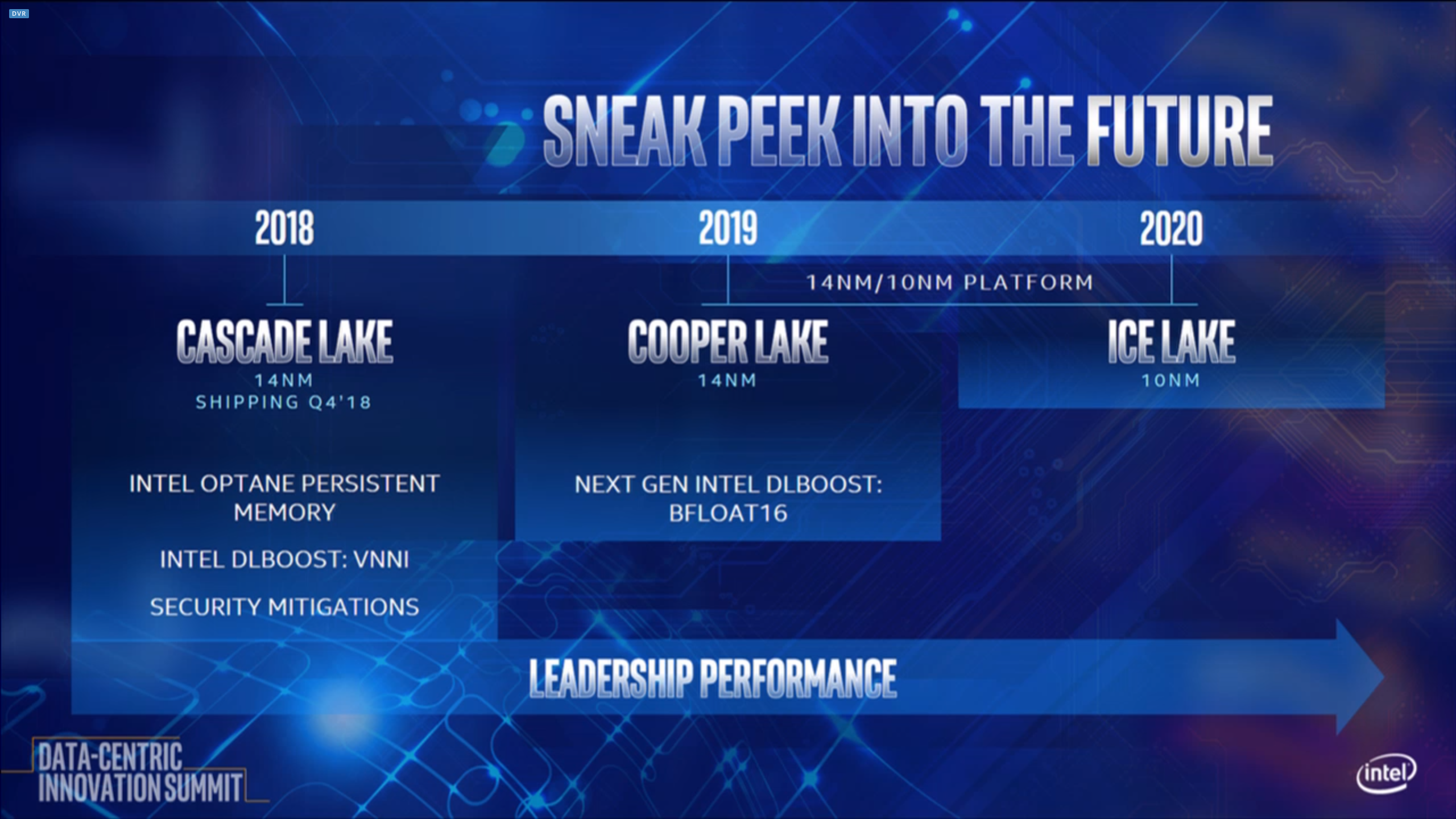

Intel used this week’s Data-Centric Innovation Summit to reveal the timeline and technology updates for its upcoming Cascade Lake, Cooper Lake and Ice Lake Xeon processors.

The Xeon timeline pretty much reflects the leaked information that we reported on two weeks ago, but Intel also used the summit to reveal more information about what we can expect from those products from a technology perspective. The more interesting tidbits have to do with enhancements for deep learning.

Source: Intel

And that starts with Cascade Lake, which will be the next Xeon processor out of the chute. It’s scheduled to start shipping in the fourth quarter of this year. As expected, it will be based on the chipmaker’s 14nm process and provide support for Intel’s newly minted Optane DC persistent memory. Also as expected, the processor will incorporate some security fixes for the Spectre and Meltdown vulnerabilities.

Cascade Lake will introduce a new set of deep learning instructions, called Variable Length Neural Network Instructions, or VNNI. This includes deep learning optimizations for INT8 (8-bit integer), which can be used for inferencing trained neural networks. Intel says the new optimizations can speed up inferencing of image recognition code by 11x compared to the current Skylake-SP Xeons and ran a ResNet-50 demo to prove the point.

As it turns out, the first iteration of VNNI was actually implemented in Knights Mill, Intel’s Xeon Phi tweaked for deep learning workloads. But given the prospects for the Xeon Phi line, it can be assumed that Xeon and the upcoming Neural Network Processor (NNP) will now take this technology forward.

Sometime in 2019, Cooper Lake will take the handoff from Cascade Lake, again on 14nm process technology. Intel was a bit stingier with the details here, offering some vague new I/O features and additional Optane memory innovations. It will be available on the new Whitley platform, which replaces Purley.

The most interesting enhancement on Cooper Lake is the added support for bfloat16, a floating point format designed to optimize deep learning computations, especially those involved with the training of the neural networks. Essentially, the format is able to squeeze a 32-bit floating point value into 16 bits by lowering the precision (same 8 bits of exponent as float32, but with just 7 bits for the mantissa). The format will also be supported in Spring Crest, Intel’s first NNP product. Interestingly, bfloat16 is currently implemented in Google’s Tensor Processing Unit (TPU).

By the way, Spring Crest is also expected to arrive in 2019, so if customers are looking for a dedicated deep learning processor that matches up better with GPUs, this will be the platform of choice. Intel is not saying how much bfloat16 and VNNI performance will be available on Cooper Lake chips, but presumably it’s not going to be so much as to eat into Spring Crest sales.

Whitney will also be the platform for Cooper Lake’s successor, Ice Lake, which we’ll get to in a moment. We bring this up to illustrate the role of Cooper Lake in the roadmap, namely to temporarily fill a gap between Cascade Lake and the much-delayed 10nm Xeon processor, which is now Ice Lake. Since AMD is expecting to ship its second-generation EPYC processor (codenamed “Rome”) in 2019 based on the its new Zen 2 microarchitecture, Intel probably felt compelled to offer something more capable than Cascade Lake in the same timeframe plus provide a chip that could be easily swapped out for the next-generation Xeon when the time came.

Note that the Rome chip will be built with 7nm technology from TSMC or GlobalFoundries, both of which are thought to be more or less equivalent to Intel’s 10nm node but significantly better than Intel’s 14nm process. Also, the second-generation EPYC will offer up to 64 cores, which Intel would be hard-pressed to match on its 14nm node, given that the 14nm Cascade Lake Xeon tops out at 28 cores.

If x86 customers find Cooper Lake wanting, they won’t have to wait long for Ice Lake, which is expected to ship in 2020. It will be Intel’s first Xeon product manufactured on its 10nm process technology. Intel isn’t saying much more than that about the future chip. However, based on the leaked roadmap information from two weeks ago, Ice Lake shipments will coincide with the rollout of the second-generation Omni-Path products, which suggest that this product will offer compatible on-chip network adapters as an option. Also expect Ice Lake to support in-package FPGA integration.

Apart from the Xeon revelations, Intel also used the event to talk up its Optane DC persistent memory, which makes a lot of sense considering this was the Data-Centric Innovation Summit. The chip giant appears to be pinning a lot of its hopes on these Optane DIMMs, which is base on Intel's and Micron's 3D XPoint non-volativle memory. And given that the product is designed to shift a lot of I/O into the memory subsystem, there is certainly the potential for huge leaps in performance, especially on disk-bound workloads.

To support that contention, Intel demonstrated an 8x reduction in query wait times for a popular analytics tool using pre-production systems equipped with Optane memory. The chipmaker also claimed a 4x increase in VMs thanks to the extra memory capacity provide by the DIMMs. And for SAP HANA jobs, the non-volatile memory was able to reduce startup times from minutes to seconds.

Early customers of the new product include Google, Tencent, Huawei, and the European Organization for Nuclear Research, aka CERN. Alberto Pace, Head of Storage at CERN is hoping that the Optane memory will solve some of the problems they’ve run up against with their in-memory catalog of Large Hadron Collider (LHC) data. Reloading from disk takes tens of minutes or even hours, says Pace, who believes this can be significantly reduced with the Optane DIMMs. Also, as new machine learning techniques are incorporated into CERN’s analytics applications, the workflows will become more reliant on the kind of large memory capacity that Optane can provide. “The performance improvements are evident,” says Pace, adding, “the diversity of applications that can profit are also very, very large.”

Keeping on the data-centric theme, Intel also talked about its efforts to bring FPGAs and silicon photonics into the datacenter. Both are poised to be critical differentiators as the competition from AMD, NVIDIA, Arm vendors, Xilinx, and IBM line up against Intel. We’ll catch up with the FPGA and silicon photonics products as soon as those roadmaps get filled out.