Aug. 9, 2016

By: Michael Feldman

On Tuesday, Intel executive Diane Bryant revealed her company was acquiring Nervana Systems, a startup that provides deep learning software and was in the process of building a custom chip optimized for those same applications. Intel has been ratcheting up its focus on AI over the past couple of months, starting with the launch of its Knights Landing Xeon Phi processor at the ISC conference in June.

Intel didn’t reveal how much it agreed to pay for the two-year-old startup, but according to a Recode report, “a source with knowledge of the deal said it is valued at more than $350 million.” As the article notes, that’s a big chunk of change for a 48-person startup that’s still wet behind the ears. Such a deal reflects the enthusiasm for AI technology in Silicon Valley these days, which has attracted $1 billion of venture capital funding since 2010.

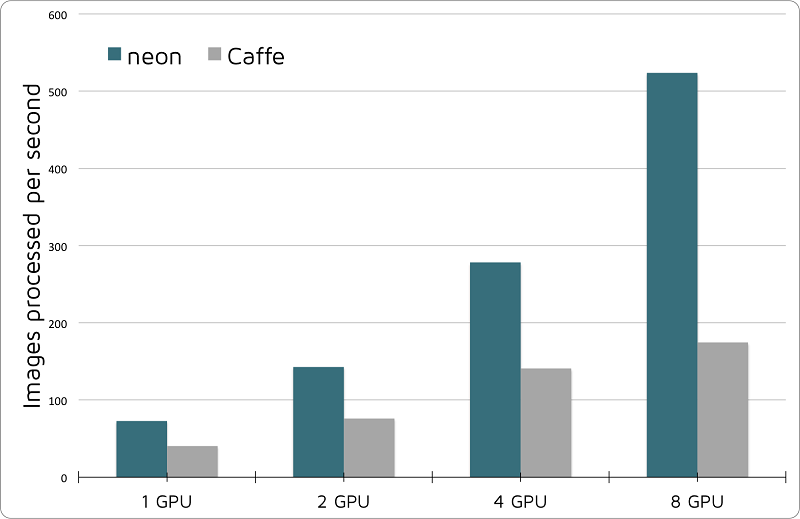

So what is Intel getting for its $350 million? Currently, Nervana offers a cloud-based service for deep learning, based on its Neon software. The company claims that for training the models, Neon is more than twice as fast as Caffe, which is probably the most widespread deep learning framework in use today. According to Nervana’s website, Neon’s superior performance is based on “assembler-level optimization, multi-GPU support, optimized data-loading, and use of the Winograd algorithm for computing convolutions.”

Certainly the Neon software was a big attraction for Intel, which at present is trailing the field in deep learning technology. On the processor side, NVIDIA dominates the space, with its deep learning-tweaked Tesla GPUs and related integration of libraries. With the introduction of Knights Landing, Intel is looking to make up some lost ground and establish itself as a player in the market, but will need help on the software front.

Although Intel has been touting Knights Landing performance for this application set, it’s far from clear that its chips will be able to outrun NVIDIA’s new Pascal GPUs, which provide significantly more peak FLOPS than the Knights Landing parts. The first of these GPUs, the P100, can provide more than 21 teraflops of peak performance using its half-precision (FP16) instructions, which were specifically designed for deep learning applications. The P100 parts will be generally available over the next few months and will go head-to-head against Knights Landing processors, which are already shipping.

If Nervana’s Neon software and the engineers who developed it can juice the Xeon Phi performance on these same applications, that would provide Intel some much-needed leverage in dislodging NVIDIA from its leading market position. It’s worth noting that Intel has a habit of developing (or buying) system software for its own silicon, even if it subsequently open sources the code for third-party developers. In that sense, the Nervana acquisition is a nice fit with Intel’s business strategy.

In Bryant’s announcement of the Nervana buy, she implies as much, writing: “Their IP and expertise in accelerating deep learning algorithms will expand Intel’s capabilities in the field of AI. We will apply Nervana’s software expertise to further optimize the Intel Math Kernel Library and its integration into industry standard frameworks.”

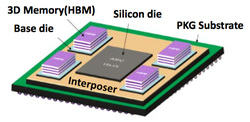

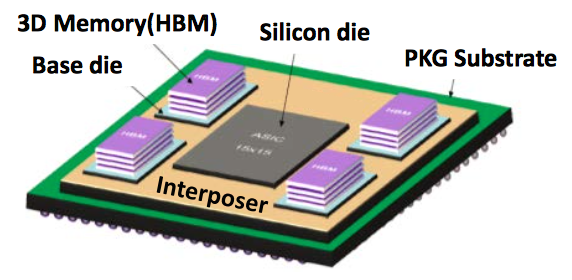

The wild card is Nervana’s deep learning chip, which the company had promised to deliver in 2017. Known as the Nervana Engine, it was going to be a fully customized ASIC that would deliver 10 times the performance in training compared to GPUs. Like the P100 from NVIDIA, the chip would take advantage of 16-bit half precision, while throwing out all the extraneous general-purpose circuitry that isn’t required for deep learning. Also like the P100, the Nervana Engine was going to incorporate High Bandwidth Memory (HBM) technology that would provide lightning-quick data access for the processor. The plan was to integrate 32 GB of HBM onto the device, which is double the amount in the current P100 parts.

How Intel would use this hardware IP is unclear. With Knights Landing, the company has positioned its Xeon Phi line as the deep learning chip of choice, so the Nervana Engine as a standalone product is probably dead. Bryant implied that parts of the design would end up in Intel’s x86 products, stating: “Nervana’s Engine and silicon expertise will advance Intel’s AI portfolio and enhance the deep learning performance and TCO of our Intel Xeon and Intel Xeon Phi processors.”

How that might play out is something of a mystery. Intel didn’t need to put Nervana’s engineers on the payroll to tell its x86 chip designers how to build FP16 instructions. And since the Xeon Phi already has a high performance memory solution with its Hybrid Memory Cube (HMC) integration, that expertise won’t be needed either. As for removing general-purpose circuitry from its x86 offerings to fully optimize the silicon for deep learning, that’s not going to happen. If Intel can extract any deep learning optimizations from Nervana, they are likely to be subtler in nature.

A more cynical explanation could be that Intel just wanted to kill the Nervana Engine in order to reduce the competition for deep learning hardware. But that would only make sense if it was willing to devour all the competition in this space, including Wave Computing’s Dataflow Processing Unit (DPU) and Google’s Tensor Processing Unit (TPU). An unlikely prospect.

One thing is for certain. There is a gold rush right now for AI technology, which some analysts think will be worth tens of billions of dollars by 2020. Besides Intel, companies with deep pockets such as Google, Amazon, Microsoft, Facebook, IBM, and Apple are hunting for IP that can propel their products to the front of the pack. For startups like Nervana and their investors, it’s a great time to be an AI innovator.