June 21, 2016

By: Michael Feldman

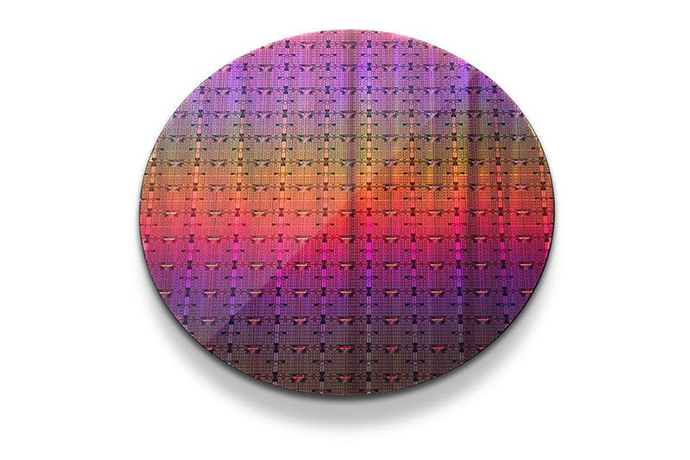

Intel’s much-awaited Knights Landing Xeon Phi processor is now being shipped in volume to OEMs and other system providers, who will soon be churning out HPC gear equipped with the new chip. And if there was any doubt, Intel made it clear that with Knights Landing, it would be going after the same set of HPC and deep learning customers that NVIDIA has been successfully courting with its Tesla GPU portfolio. The official launch of the new processor was announced at the ISC High Performance conference (ISC), which is taking place this week in Frankfurt.

For the initial release, Intel will put four SKUs of the processor into production, ranging from 64 to 72 cores, with clock frequencies from 1.3 GHz to 1.5 GHz. All come with 16 GB of MCDRAM, the high-bandwidth stacked memory that Intel has incorporated into the package. This in-package memory delivers 490 GB/second of bandwidth and can serve as an extra-speedy data buffer between the processor and the more plentiful DDR4 memory on the motherboard. In a nutshell, the current product set looks like this:

| Version | Cores | Clock (GHz) | Power (TDP) |
|---------|-------|-------------|-------------|
| 7290    | 72    | 1.5         | 245 W       |
| 7250    | 68    | 1.4         | 215 W       |
| 7230    | 64    | 1.3         | 215 W       |
| 7210    | 64    | 1.3         | 215 W       |

The top-end chip, the 7290, will provide close to 3.5 teraflops of peak double precision performance. However, this one won’t be generally available until September. The 7210 is the least expensive and least performant of the bunch. It supports slightly slower DDR4 memory than the other three (2133 MHz versus 2400 MHz) and tops out at just under 2.7 teraflops.
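The peak figures above follow from cores × clock × floating point operations per cycle. The sketch below is a back-of-the-envelope check, not Intel's published methodology. It assumes each Knights Landing core can retire 32 double precision FLOPs per cycle (two AVX-512 vector units, eight 64-bit lanes each, with a fused multiply-add counted as two operations) and uses the clocks from the table. Actual clocks under heavy vector load may be lower. The function name `peak_dp_tflops` is hypothetical.

```python
# Back-of-the-envelope peak double precision throughput for the initial
# Knights Landing SKUs.
# Assumption: 32 DP FLOPs per core per cycle
# (2 AVX-512 units x 8 doubles per vector x 2 ops per fused multiply-add).
FLOPS_PER_CYCLE = 2 * 8 * 2

# (cores, clock in GHz) taken from the table above
SKUS = {
    "7290": (72, 1.5),
    "7250": (68, 1.4),
    "7230": (64, 1.3),
    "7210": (64, 1.3),
}


def peak_dp_tflops(cores: int, ghz: float) -> float:
    """Theoretical peak in teraflops: cores x GHz x FLOPs/cycle gives GFLOPS; divide by 1000."""
    return cores * ghz * FLOPS_PER_CYCLE / 1000.0


for sku, (cores, ghz) in SKUS.items():
    print(f"{sku}: {peak_dp_tflops(cores, ghz):.2f} TFLOPS")
```

Under these assumptions the 7290 works out to roughly 3.46 teraflops and the 7210 to roughly 2.66, consistent with the "close to 3.5" and "just under 2.7" figures quoted above. The 7230 lands at the same peak as the 7210; the difference between them is the memory speed, not the compute.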

There will also be a version of each of these processors with an integrated Omni-Path network adapter interface. However, those won’t be generally available until October, according to the Xeon Phi product spec sheet.

Just looking at the raw speeds, Knights Landing doesn’t match the FLOPS and memory bandwidth of NVIDIA’s new Tesla Pascal GPU, the P100. This new GPU delivers around 5 teraflops of double precision performance and up to 720 GB/sec of memory bandwidth. We take a deeper dive into that processor here.

According to Intel, the new 7200-series Knights Landing processors outran NVIDIA’s GPUs on a number of application benchmarks, including the molecular dynamics code known as the Large-scale Atomic/Molecular Massively Parallel Simulator (LAMMPS). In this case, a server equipped with a 68-core 7250 ran LAMMPS five times faster and delivered eight times better performance per watt than a server outfitted with a Tesla K80 GPU.
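Taken together, the two LAMMPS ratios say something about relative power draw. Assuming both ratios come from the same runs, so that performance per watt is simply throughput divided by server power $P$, a minimal worked version is:

```latex
\frac{(\mathrm{perf}/\mathrm{W})_{\mathrm{KNL}}}{(\mathrm{perf}/\mathrm{W})_{\mathrm{K80}}}
  = \frac{\mathrm{perf}_{\mathrm{KNL}}}{\mathrm{perf}_{\mathrm{K80}}}
    \cdot \frac{P_{\mathrm{K80}}}{P_{\mathrm{KNL}}}
\quad\Longrightarrow\quad
8 = 5 \cdot \frac{P_{\mathrm{K80}}}{P_{\mathrm{KNL}}}
\quad\Longrightarrow\quad
\frac{P_{\mathrm{KNL}}}{P_{\mathrm{K80}}} = \frac{5}{8} \approx 0.63
```

On that assumption, the Knights Landing server would have drawn roughly 63 percent of the power of the K80 server. This is an inference from the two quoted ratios, not a power figure Intel reported.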

Of course, with the introduction of the P100, the K80 is now the older Tesla technology, and runs about 50 percent slower than its replacement in both peak FLOPS and memory bandwidth. Intel also claimed 5X better performance on a ray tracing visualization workload, and nearly 3X on a financial risk modeling code, but again, matched up against older GPU silicon. Hopefully, the coming months will bring a more level playing field that pits NVIDIA’s Pascal GPUs against Intel’s Knights Landing chips.

Intel continues to claim that Xeon Phi is wrapped in a friendlier software environment than the competition, touting x86 compatibility and a mature suite of development tools and libraries that customers are already comfortable using. That may have been true at one time, but the CUDA toolset and its associated software ecosystem have become so rich and expansive over the last 10 years that it’s not clear Intel has much of an advantage in this regard anymore.

One area where the new Xeon Phi has a clear leg up is its ability to operate as a standalone processor. Although Intel will offer a coprocessor option for Knights Landing in a PCIe card form factor, the majority of these devices are likely to be employed as self-hosted processors. That removes the run-time complication and overhead of transferring data back and forth between a host CPU and a coprocessor, and should make porting applications to this platform more straightforward. The only downside to consider for the self-hosted approach is whether the throughput-oriented Xeon Phi cores can execute the serial portion of an application efficiently.
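The serial-code concern is essentially Amdahl's law. The sketch below is illustrative only: the 68-core count matches the 7250, but the per-core speed of 0.3 relative to a conventional latency-optimized core is a hypothetical number chosen for the example, not a measured Knights Landing figure. The function name `speedup` is likewise hypothetical.

```python
# Amdahl's law on a self-hosted many-core chip (illustrative numbers only).
# Times are normalized so that one reference (fast, latency-optimized) core
# runs the whole application in 1.0 time unit.


def speedup(serial_fraction: float, cores: int, core_speed: float) -> float:
    """Speedup over one reference core when each core runs at core_speed
    (relative to the reference) and only the parallel part uses all cores."""
    serial_time = serial_fraction / core_speed                     # runs on one slow core
    parallel_time = (1.0 - serial_fraction) / (cores * core_speed)  # spread over all cores
    return 1.0 / (serial_time + parallel_time)


# Hypothetical: 68 cores, each at 0.3x the single-thread speed of the reference core.
for f in (0.0, 0.01, 0.05, 0.10):
    print(f"serial fraction {f:.0%}: {speedup(f, 68, 0.3):.1f}x")
```

With these made-up parameters the speedup falls from about 20x for perfectly parallel code to under 3x once a tenth of the work is serial. The slower each individual core, the more that serial slice dominates, which is the trade-off a self-hosted throughput processor has to contend with.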

Perhaps the most interesting capability Intel is promoting with the new Xeon Phi is its ability to take on deep learning work. That’s not too surprising, considering that deep learning has become the HPC application of the month by the looks of things here at ISC. At the conference on Monday, Intel VP Raj Hazra, who heads up the Enterprise & HPC Platform Group, spent a good deal of his session talking up the advantages of the new Knights Landing chips on deep learning workloads specifically, and on artificial intelligence (AI) more generally. From here on out, it looks like AI is going to become a much bigger theme in Intel’s HPC organization.

With regard to the Knights Landing hardware, Hazra said that Intel was able to scale a deep neural network (DNN) training application, known as AlexNet, across 128 of its 7250 Knights Landing processors, achieving a 50X speedup over a single chip. Running that same application on just four 7250s, Intel was able to run AlexNet more than twice as fast as a four-GPU system powered by NVIDIA’s somewhat dated Maxwell processors. Although Hazra made no mention of it, there’s speculation that Intel will also add a half-precision (16-bit floating point) capability to future Xeon Phi products to further optimize the platform for deep learning work.
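One way to read the 128-chip AlexNet result is as parallel efficiency, the speedup $S$ divided by the processor count $N$. This assumes the 50X is measured against a single 7250 running the same training job; Intel's exact baseline and problem sizing were not detailed.

```latex
E = \frac{S}{N} = \frac{50}{128} \approx 0.39
```

In other words, each of the 128 chips contributed on average about 39 percent of what a lone chip delivers, so communication and synchronization overheads were substantial but the cluster still ran the job far faster than one processor could.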

If it turns out that Xeon Phi silicon can consistently beat its GPU competition on such workloads, and especially if it can do so at an attractive price point, NVIDIA’s dominance as the processor supplier for these DNN training clusters would come to an end. That’s not likely to happen anytime soon, given the GPU-maker’s experience with the DNN application set and the amount of resources it seems willing to invest in this emerging market.

Speculating at this point is a bit hazardous, since neither the Knights Landing processor nor the Pascal GPU is in production systems yet. But over the next 12 months, they will be, and it’s likely that a much clearer picture will emerge on the relative merits of each platform. Let the accelerator games begin.