By: TOP500 Team

Deep learning has reinvigorated machine learning research and inspired a gold rush of technology innovation across markets ranging from Internet search and social media to real-time robotics, self-driving vehicles, drones and more. Spanning the gamut of machine performance, deep learning (and machine learning in general) encompasses floating-point-, network- and data-intensive ‘training’ plus real-time, low-power ‘prediction’ operations. With exascale-capable parallel mappings, the training process in particular can stretch the capabilities of current and future leadership class supercomputers, while compute clusters and even workstations powered by Intel® Xeon® processors and Intel® Xeon Phi™ coprocessors can be used to train machines to solve complex problems and evaluate solutions en masse.

To meet the needs of deep learning and other HPC workloads, Intel has created Intel® Scalable System Framework (Intel® SSF), Intel’s holistic direction for developing high performance, balanced, power-efficient and reliable systems capable of supporting a wide range of workloads. Intel SSF covers both hardware and software, plus an outline of how to incorporate yet-to-be-released reference architectures and reference designs. Reimagine your HPC Infrastructure with Intel® Scalable System Framework.

Figure 1: Intel® Scalable System Framework

Training ‘complex multi-layer’ neural networks is referred to as deep learning because these multi-layer architectures interpose many neural processing layers between the input data and the predicted output results, hence the word ‘deep’ in the deep-learning catchphrase. While the training procedure is computationally expensive, evaluating the resulting trained neural network is not. This is why trained networks can be extremely valuable: they can very quickly perform complex, real-world pattern recognition tasks on a variety of low-power devices, including security cameras, mobile phones and wearable technology.
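The cost gap between evaluation and training can be made concrete with a small sketch. The code below is an illustration only, not taken from any Intel software: it evaluates a hypothetical fully connected 4-3-2 network in plain Python and counts the multiply-add operations for one input. The network shape, the constant weights, the `tanh` activation and the training set size and pass count are all assumptions chosen for the example.

```python
import math

def make_layer(n_in, n_out, value=0.1):
    """Build a hypothetical dense layer: n_out rows of n_in weights, zero biases."""
    weights = [[value] * n_in for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(layers, x):
    """Evaluate the network on one input; return (outputs, multiply-add count)."""
    multiply_adds = 0
    for weights, biases in layers:
        outputs = []
        for row, bias in zip(weights, biases):
            acc = bias
            for w, xi in zip(row, x):
                acc += w * xi          # one multiply-add per weight
                multiply_adds += 1
            outputs.append(math.tanh(acc))
        x = outputs                    # this layer's outputs feed the next layer
    return x, multiply_adds

layers = [make_layer(4, 3), make_layer(3, 2)]
output, per_example = forward(layers, [1.0, 0.5, -0.5, 0.25])

# Assumed training workload: every pass evaluates the network on every example.
# This counts forward evaluations only, so it is a lower bound on training cost.
examples, passes = 1_000_000, 1_000
training_lower_bound = per_example * examples * passes

print(per_example)            # 18 multiply-adds for one prediction
print(training_lower_bound)   # 18,000,000,000 multiply-adds just for the forward passes
```

Under these assumptions a single prediction costs 18 multiply-adds, while training costs at least a billion times that, which is the asymmetry the paragraph above describes.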

The Intel Xeon Phi processor product family is but one part of Intel SSF that will bring machine learning and HPC into the exascale era. Intel’s vision is to help create systems that converge HPC, Big Data, machine learning, and visualization workloads within a common framework that can run in the data center (from smaller workgroup clusters to the world’s largest supercomputers) or in the cloud. Intel SSF incorporates a host of innovative new technologies, including Intel® Omni-Path Architecture (Intel® OPA), Intel® Optane™ SSDs built on 3D XPoint™ technology, and new Intel® Silicon Photonics. It also incorporates Intel’s existing and upcoming compute and storage products, including Intel Xeon processors, Intel Xeon Phi processors, and Intel® Enterprise Edition for Lustre* software.

COMPUTATION

Fueled by modern parallel computing technology, it is now possible to train very complex multi-layer neural network architectures on large data sets to an acceptable level of accuracy. These high-value trained models, whose recognition accuracy is sometimes better than human, can be deployed nearly everywhere, which explains the recent resurgence in machine learning and in deep-learning neural networks in particular.

Intel® Xeon Phi™ Processors

The new Intel processors, in particular the upcoming Intel Xeon Phi processor family (code name Knights Landing), promise to set new levels of training performance with multiple per-core vector processing units, Intel® AVX-512 long vector instructions, high-bandwidth MCDRAM to reduce memory bottlenecks, and Intel Omni-Path Architecture to couple large numbers of distributed computational nodes even more tightly.

Intel® Xeon® Processors

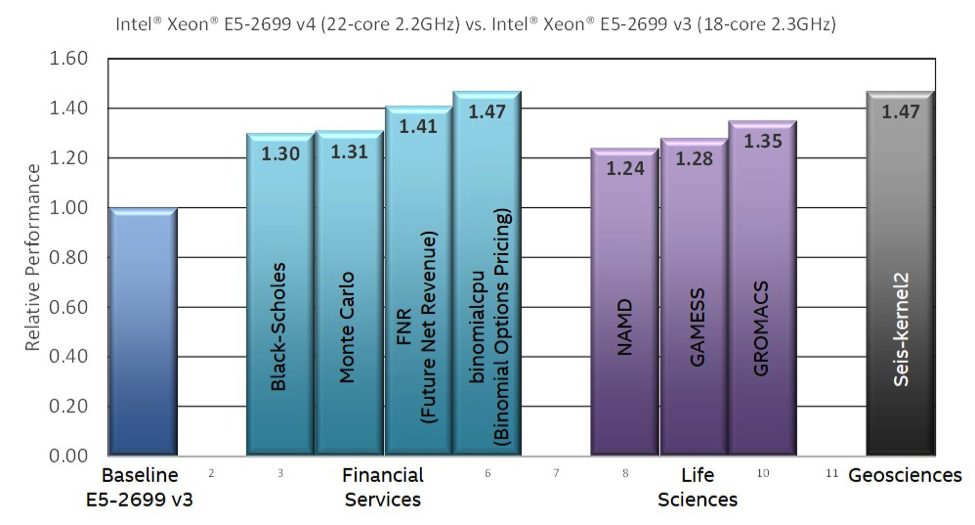

The recently announced Intel Xeon processor E5 v4 product family, based upon the “Broadwell” microarchitecture, is another component of Intel SSF that can help meet the computational requirements of organizations on a variety of HPC workloads. In particular, microarchitecture improvements raise per-core floating-point performance, particularly for the floating-point multiply and FMA (Fused Multiply-Add) operations that dominate machine learning runtimes, and improve parallel, multi-core efficiency. The E5 v4 product family delivers up to 47% (1) more performance on HPC workloads, which can ultimately save both time and money because fewer machines or cloud instances need to be deployed.

Figure 2: Performance on a variety of HPC applications

COMPUTATIONAL VS. MEMORY BANDWIDTH BOTTLENECKS

The hardware characteristics of a computational node determine which bottleneck will limit performance, specifically whether the node will be compute limited or memory-bandwidth limited. A balanced hardware architecture like that of the Intel Xeon Phi processors will support many machine-learning algorithms and configurations plus a variety of HPC workloads. Computational nodes based on the new Intel Xeon Phi processors promise to greatly increase floating-point performance, while on-package MCDRAM greatly increases memory bandwidth (2).
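One common way to reason about which bottleneck applies is the roofline model, which is brought in here as an illustration and is not part of the article. It bounds attainable performance by the smaller of peak compute and memory bandwidth times the kernel's arithmetic intensity (flops per byte moved). The sketch below uses the rounded figures from footnote 2 (3 TF double precision, 400 GB/s MCDRAM, 90 GB/s DDR); the function names and the example intensities are assumptions.

```python
# Rounded figures from footnote 2; the "+" in "3+ TF" and "400+" is dropped.
PEAK_DP_GFLOPS = 3000.0   # double-precision vector peak
MCDRAM_GBS = 400.0        # STREAM Triad bandwidth from MCDRAM
DDR_GBS = 90.0            # STREAM Triad bandwidth from DDR

def attainable_gflops(peak_gflops, bandwidth_gbs, flops_per_byte):
    """Roofline bound: limited by compute or by memory traffic, whichever is lower."""
    return min(peak_gflops, bandwidth_gbs * flops_per_byte)

def ridge_point(peak_gflops, bandwidth_gbs):
    """Arithmetic intensity (flops/byte) above which a kernel becomes compute limited."""
    return peak_gflops / bandwidth_gbs

# A hypothetical kernel doing 1 flop per byte is bandwidth limited on both memories.
low_mcdram = attainable_gflops(PEAK_DP_GFLOPS, MCDRAM_GBS, 1.0)   # 400.0
low_ddr = attainable_gflops(PEAK_DP_GFLOPS, DDR_GBS, 1.0)         # 90.0

# A hypothetical kernel doing 10 flops per byte reaches peak from MCDRAM only.
high_mcdram = attainable_gflops(PEAK_DP_GFLOPS, MCDRAM_GBS, 10.0)  # 3000.0
high_ddr = attainable_gflops(PEAK_DP_GFLOPS, DDR_GBS, 10.0)        # 900.0

print(ridge_point(PEAK_DP_GFLOPS, MCDRAM_GBS))  # 7.5 flops/byte
print(ridge_point(PEAK_DP_GFLOPS, DDR_GBS))
```

With these numbers, a kernel needs roughly 7.5 flops per byte to become compute limited when its data sits in MCDRAM, and more than four times that when it streams from DDR. Real kernels will fall below these bounds.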

LOAD BALANCING ACROSS DISTRIBUTED CLUSTERS

Large computational clusters can be used to speed training and dramatically increase the usable training set size. For example, both the ORNL Titan and TACC Stampede leadership class supercomputers are able to sustain petaflop-per-second performance on machine-learning algorithms (3).
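One way a cluster can speed training is data parallelism: the training set is split into shards, each node computes the error of the current model over its own shard, and the per-node results are summed. The sketch below simulates this on a single machine. It is an assumption about the general technique, not a description of the code run on Titan or Stampede, and the linear model, data set and node count are hypothetical.

```python
import math

def partial_error(params, shard):
    """Sum of squared errors of the model y = a*x + b over one node's shard."""
    a, b = params
    return sum((a * x + b - y) ** 2 for x, y in shard)

def split(data, n_nodes):
    """Deal the training examples out to n_nodes shards, round-robin."""
    return [data[i::n_nodes] for i in range(n_nodes)]

# Hypothetical training set generated from y = 2x + 1.
data = [(float(x), 2.0 * x + 1.0) for x in range(1000)]
params = (1.5, 0.0)            # current (imperfect) model parameters

shards = split(data, 4)        # four simulated nodes
partials = [partial_error(params, shard) for shard in shards]  # one float per node
total = sum(partials)          # the reduction step

# The distributed result matches the single-node computation.
assert math.isclose(total, partial_error(params, data))
```

Each simulated node returns a single floating-point number regardless of how large its shard is, so adding nodes grows the usable training set without growing the size of the result each node sends back.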

A challenge with utilizing multi-TF/s computational nodes like the Intel Xeon Phi processor family is that, because the nodes are so fast, the training process can become bottlenecked by the reduction operation. The reduction operation is not particularly data intensive, with each result being a single floating-point number. This means that network latency, rather than network bandwidth, limits the performance of the reduction operation when the node processing time is less than the small message network latency. Intel OPA specifications are exciting for machine-learning applications as they promise to speed distributed reductions through: (a) a 4.6x improvement in small message throughput over the previous generation fabric technology (4), (b) a 65ns decrease in switch latency (think how all those latencies add up across all the switches in a big network), and (c) an on-chip Intel OPA interface on some of the new Intel Xeon Phi processor family, which may help to reduce latency even further.
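To see how per-switch latency adds up, consider a rough model. It assumes the reduction is organized as a binary tree, so N nodes need about log2(N) sequential message steps, and that each message crosses a fixed number of switches. Both are simplifying assumptions, as are the node count and hop count in the example. Only the 65 ns per-switch saving comes from the text above.

```python
SWITCH_SAVING_NS = 65   # per-switch latency decrease quoted in the article

def reduction_steps(n_nodes):
    """Sequential message steps in a binary-tree reduction: ceil(log2(n_nodes))."""
    return (n_nodes - 1).bit_length()

def reduction_latency_ns(n_nodes, endpoint_ns, switch_ns, hops):
    """Latency-bound reduction time: each step pays endpoint plus per-hop switch latency."""
    return reduction_steps(n_nodes) * (endpoint_ns + hops * switch_ns)

def saving_ns(n_nodes, hops):
    """Time saved per reduction when every switch hop gets SWITCH_SAVING_NS faster."""
    return reduction_steps(n_nodes) * hops * SWITCH_SAVING_NS

# Hypothetical cluster: 4096 nodes, 3 switch hops per message.
print(reduction_steps(4096))   # 12
print(saving_ns(4096, 3))      # 2340 ns saved on every reduction
```

In this model the payload size never appears, which reflects the point that a reduction of single floating-point values is latency limited rather than bandwidth limited. Because training performs one reduction per optimization step, a saving of a few microseconds recurs on every step.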

From a bandwidth perspective, Intel OPA also promises 100 Gb/s of bandwidth, which will greatly help speed the broadcast of millions of deep-learning network parameters to all the nodes in the computational cluster. Running Lustre* over Intel OPA also promises to reduce the overhead of starting/restarting large training runs and other HPC workloads.
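A back-of-the-envelope estimate shows what 100 Gb/s means for a parameter broadcast. The sketch below computes the time to push one copy of the parameters through a single link at the full line rate. The parameter count and the use of 4-byte single-precision values are assumptions, and the estimate ignores latency, protocol overhead and the broadcast topology, so it is a lower bound.

```python
LINK_GBITS_PER_S = 100.0   # Intel OPA bandwidth quoted in the article

def transfer_seconds(n_params, bytes_per_param=4, link_gbits_per_s=LINK_GBITS_PER_S):
    """Ideal time to send n_params values over one link at full line rate."""
    bits = n_params * bytes_per_param * 8
    return bits / (link_gbits_per_s * 1e9)

# Hypothetical network with 10 million single-precision parameters (40 MB).
seconds = transfer_seconds(10_000_000)
print(f"{seconds * 1e3:.1f} ms")   # 3.2 ms
```

Unlike the reduction, this cost scales with the number of parameters, so the broadcast is the step where link bandwidth rather than latency matters.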

FOR MORE INFORMATION

Click here for more information about how recommended Intel Scalable System Framework configurations and software can speed your deep learning and other HPC workloads.

For more information on exascale-capable machine learning see:

• Chapter 7, “Deep Learning and Numerical Optimization” in High Performance Parallelism Pearls.

• Dr. Dobbs, “Exceeding Supercomputer Performance with Intel Phi”.

1 Software and workloads used in performance tests may have been optimized for performance only on Intel microprocessors. Performance tests, such as SYSmark and MobileMark, are measured using specific computer systems, components, software, operations and functions. Any change to any of those factors may cause the results to vary. You should consult other information and performance tests to assist you in fully evaluating your contemplated purchases, including the performance of that product when combined with other products. For more complete information visit http://www.intel.com/performance. Results based on Intel® internal measurements as of February 29, 2016.

2 Vector Peak Perf: 3+TF DP and 6+TF SP Flops; Scalar Perf: ~3x over Knights Corner; Streams Triad (GB/s): MCDRAM: 400+; DDR: 90+. See slide 4 http://www.hotchips.org/wp-content/uploads/hc_archives/hc27/HC27.25-Tuesday-Epub/HC27.25.70-Processors-Epub/HC27.25.710-Knights-Landing-Sodani-Intel.pdf

3 http://insidehpc.com/2013/06/tutorial-on-scaling-to-petaflops-with-intel-xeon-phi/