Dec. 20, 2017

By: Michael Feldman

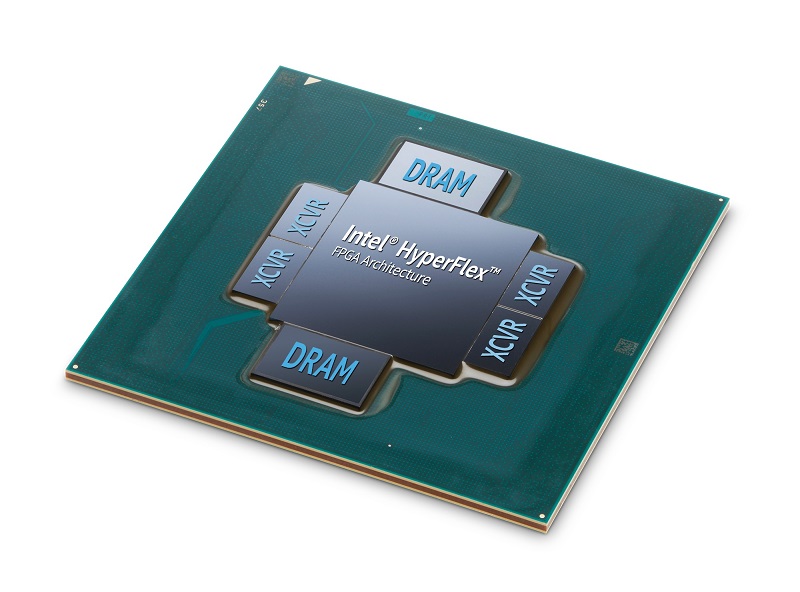

Intel has released the Stratix 10 MX, the company’s first FPGA family that includes integrated High Bandwidth Memory (HBM2).

HBM2 consists of a stack of DRAM chips linked to one another in parallel using through-silicon via (TSV) interconnects. This 3D design enables much faster data transfer rates than conventional 2D layouts, which are limited by the number of pins that can be connected to the edge of the DRAM chips. According to Intel, HBM2 will deliver as much as 10 times the memory bandwidth of a conventional DDR4 solution – up to 256 GB/second per HBM2 stack. The technology also delivers about 35 percent better performance per watt.
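
The pin-count argument comes down to simple arithmetic: peak bandwidth is bus width times per-pin data rate. The sketch below is illustrative only and assumes standard figures that the article does not state: a 1024-bit HBM2 stack interface running at 2,000 Mb/s per pin, compared against a single 64-bit DDR4-3200 channel. Under those assumptions it reproduces both the 256 GB/second per-stack figure and the roughly 10x ratio Intel cites. These are theoretical peaks, and Intel's actual comparison basis may differ.

```python
# Peak bandwidth of a parallel DRAM interface:
#   bytes per second = bus width (bits) x per-pin data rate (bits/s) / 8
# Assumed figures (not stated in the article): a standard HBM2 stack with a
# 1024-bit interface at 2,000 Mb/s per pin, and a single DDR4-3200 channel
# with a 64-bit data bus at 3,200 Mb/s per pin.

def peak_bandwidth_gb_s(bus_width_bits: int, mbit_per_pin: int) -> float:
    """Return theoretical peak bandwidth in decimal GB/s (1 GB = 10**9 bytes)."""
    megabytes_per_s = bus_width_bits * mbit_per_pin // 8  # exact integer MB/s
    return megabytes_per_s / 1000


hbm2_stack = peak_bandwidth_gb_s(1024, 2000)   # very wide bus, modest pin speed
ddr4_channel = peak_bandwidth_gb_s(64, 3200)   # narrow bus, faster pins

print(f"HBM2 stack:   {hbm2_stack:.1f} GB/s")    # HBM2 stack:   256.0 GB/s
print(f"DDR4 channel: {ddr4_channel:.1f} GB/s")  # DDR4 channel: 25.6 GB/s
print(f"Ratio:        {hbm2_stack / ddr4_channel:.1f}x")  # Ratio:        10.0x
```

The point of the comparison is that HBM2 wins on width, not pin speed: TSV stacking makes a 1024-bit bus practical, something edge-connected 2D packaging cannot match.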

Depending on the specific model, Stratix 10 MX devices are equipped with 3.25, 8, or 16 gigabytes of HBM2 memory. The FPGAs also come with a smaller amount of even faster on-chip embedded SRAM – 45 or 90 megabits – that supplements the existing on-chip block RAM.

The faster memory subsystem is designed to speed up applications that handle streaming data or other types of real-time memory operations. That covers a fairly broad range of datacenter workloads, including high performance computing, network function virtualization, and broadcasting. The HBM2 technology is especially useful when applications have to perform encryption and decryption on the fly.

“To efficiently accelerate these workloads, memory bandwidth needs to keep pace with the explosion in data,” said Reynette Au, vice president of marketing, Intel Programmable Solutions Group. “We designed the Intel Stratix 10 MX family to provide a new class of FPGA-based multi-function data accelerators for HPC and HPDA markets.”

Besides all the memory wizardry, the Stratix 10 MX is equipped with between one and two million logic elements, as well as the option of a quad-core ARM processor (on one model). The devices also come with variable-precision digital signal processing (DSP) blocks of various sizes, which can provide up to 10 teraflops of single precision performance – handy if you happen to be doing seismic imaging or processing neural networks. In fact, thanks to the super-speedy memory, these FPGAs would seem to be well-suited to deep learning applications.

Microsoft is using Stratix 10 hardware for the next upgrade to its AI infrastructure in Azure, but it’s not clear whether any of these FPGAs will be based on the MX family. The inclusion of HBM2 in these devices would probably drive the cost beyond what would be feasible for a mass deployment across a vast cloud infrastructure such as Azure. Nevertheless, for smaller installations, these FPGAs could offer an interesting alternative to GPUs for deep learning training or inferencing.

The full specs for the Stratix 10 MX family are provided here.