Sept. 8, 2016

By: Michael Feldman

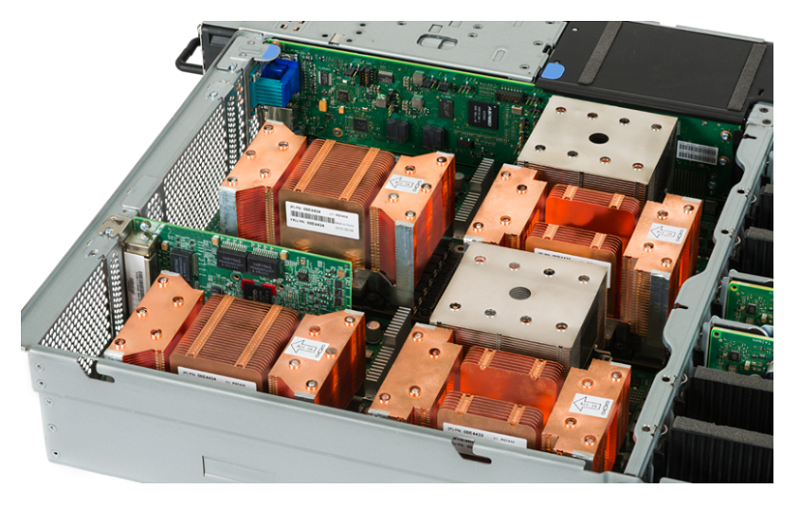

IBM has unveiled what is probably the most powerful server the company has ever offered and one of the most computationally dense on the planet. The new S822LC for High Performance Computing, as it is called, is equipped with two IBM Power8 processors and four of NVIDIA’s latest Tesla P100 GPUs. As such, IBM is the first OEM to go to market with Pascal GPU-accelerated servers incorporating NVLink technology.

Being this early in the game reflects IBM’s devotion to NVIDIA as a key OpenPower partner, but more than that, it represents Big Blue’s aspirations to play competitively at the high end of the datacenter space, namely HPC, deep learning, and high performance data analytics. Without NVIDIA’s silicon, that would have been a much more difficult prospect. The Power8 processors are big, brawny CPUs in their own right, but without accelerators like GPUs to connect to, IBM’s penetration into these markets would be much shallower.

To drive home that point, the four GPUs in the new S822LC represent about 97 percent of the FLOPS in that box. At more than 20 teraflops (double precision) of capability, each server houses what is essentially a supercomputer in a 2U form factor. A cluster of these with a couple of dozen nodes would probably qualify for a TOP500 placement.
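The 97 percent figure can be roughly reproduced with a back-of-the-envelope calculation. The sketch below is not IBM's own accounting: it assumes a peak of 5.3 double-precision teraflops per NVLink-attached P100 and 8 double-precision floating point operations per cycle per Power8 core, neither of which is stated in this article. The core count and clock speed are the ones quoted for this server.

```python
# Rough peak double-precision FLOPS for one S822LC node.
# Assumptions (not from the article): P100 peak of 5.3 TFLOPS FP64,
# and 8 FP64 flops per cycle per Power8 core.
GPU_COUNT = 4
P100_FP64_TFLOPS = 5.3            # assumed vendor peak for the NVLink P100

CPU_SOCKETS = 2
CORES_PER_SOCKET = 10             # from the article
CLOCK_GHZ = 3.26                  # from the article
FLOPS_PER_CYCLE_PER_CORE = 8      # assumed

gpu_tflops = GPU_COUNT * P100_FP64_TFLOPS
# cores * GHz * flops/cycle gives gigaflops; divide by 1000 for teraflops.
cpu_tflops = (CPU_SOCKETS * CORES_PER_SOCKET * CLOCK_GHZ
              * FLOPS_PER_CYCLE_PER_CORE) / 1000
gpu_share = gpu_tflops / (gpu_tflops + cpu_tflops)

print(f"GPU peak:  {gpu_tflops:.1f} TFLOPS")
print(f"CPU peak:  {cpu_tflops:.2f} TFLOPS")
print(f"GPU share: {gpu_share:.1%}")
```

Under those assumptions the GPUs contribute a little over 21 teraflops and the two CPUs about half a teraflop, which puts the GPU share between 97 and 98 percent, in line with the figure above.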

GPUs aside, the 10-core Power8 is no slacker, with a fast clock (3.26 GHz) and lots of memory bandwidth (115 GB/sec per socket). The server itself can be outfitted with up to a terabyte of main memory across the two sockets. That is in addition to the 64 GB of stacked high bandwidth memory (HBM) spread across the four P100 GPU modules.

But the real centerpiece technology in this product is NVLink, NVIDIA’s superfast inter-processor interconnect that moves data at up to 80 GB/sec. That’s about five times faster than what can be achieved on a PCIe x16 Gen3 link, the default method of hooking CPUs to accelerators. NVLink is built into the P100 GPUs, and IBM accommodated NVIDIA by creating a new Power8 chip that incorporates the NVLink interface into its own hardware. The S822LC for HPC also has the NVLink interface built into the motherboard. Right now this is the only server in the world that supports NVLink for both CPU-to-GPU and GPU-to-GPU communication.
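The "about five times" comparison is easy to check against the PCIe Gen3 signaling rate. The sketch below takes the 80 GB/sec NVLink figure at face value (the article does not say whether it is counted per direction or in aggregate) and computes the one-direction bandwidth of a Gen3 x16 link from its 8 GT/sec lane rate and 128b/130b encoding.

```python
# Compare the quoted NVLink rate with a PCIe Gen3 x16 link.
NVLINK_GB_PER_S = 80.0            # from the article, taken at face value

# PCIe Gen3 parameters: 8 GT/s per lane, 128b/130b line encoding, 16 lanes.
PCIE_GT_PER_LANE = 8.0
PCIE_LANES = 16
PCIE_ENCODING_EFFICIENCY = 128 / 130

# Gigatransfers carry one bit each; divide by 8 to convert bits to bytes.
pcie_gb_per_s = PCIE_GT_PER_LANE * PCIE_LANES * PCIE_ENCODING_EFFICIENCY / 8

ratio = NVLINK_GB_PER_S / pcie_gb_per_s

print(f"PCIe Gen3 x16: {pcie_gb_per_s:.2f} GB/s per direction")
print(f"NVLink / PCIe: {ratio:.1f}x")
```

This gives a little under 16 GB/sec for PCIe before protocol overhead, so the ratio comes out just above five.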

The reason this is such a big deal is not just that NVLink offers blazing fast data rates, but that those rates make it practical to support a unified memory space, which simplifies GPU programming considerably. The P100 offers something called the Page Migration Engine, which automates data transfers between CPU and GPU when the program needs to move data across these physical memory spaces. Thus, tasks can be moved from the CPU to the GPU without the application developer having to explicitly manage the transfers. Since NVLink is there to move the data so quickly, there’s a lot less overhead involved in shuffling these tasks around. As a result, it’s now practical to move smaller tasks to the GPU that could not be justified over a slower PCIe link.
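The point about smaller tasks can be illustrated with a simple cost model: offloading pays off only when the transfer time plus the GPU run time beats the CPU run time. Everything in the sketch below is hypothetical, including the function names, the 10-microsecond link latency, the 10x GPU speedup, and the task size. It also counts only a one-way transfer and is not a measurement of the S822LC.

```python
def offload_time(bytes_moved, link_gb_per_s, gpu_seconds, link_latency_s=10e-6):
    """Seconds to ship the data across the link and run the task on the GPU."""
    transfer_seconds = link_latency_s + bytes_moved / (link_gb_per_s * 1e9)
    return transfer_seconds + gpu_seconds


def worth_offloading(bytes_moved, link_gb_per_s, cpu_seconds, gpu_speedup):
    """True if moving the task to the GPU finishes sooner than staying on the CPU."""
    gpu_seconds = cpu_seconds / gpu_speedup
    return offload_time(bytes_moved, link_gb_per_s, gpu_seconds) < cpu_seconds


# Hypothetical task: 5 ms on the CPU, 80 MB of data, 10x faster on the GPU.
task_bytes = 80e6
cpu_seconds = 5e-3
gpu_speedup = 10

for name, bandwidth in [("PCIe Gen3 x16", 16), ("NVLink", 80)]:
    total = offload_time(task_bytes, bandwidth, cpu_seconds / gpu_speedup)
    verdict = worth_offloading(task_bytes, bandwidth, cpu_seconds, gpu_speedup)
    print(f"{name}: {total * 1e3:.2f} ms on GPU path, offload worthwhile: {verdict}")
```

With these made-up numbers, the transfer alone takes as long over PCIe as the whole task takes on the CPU, so offloading loses. Over the faster link the same task finishes well ahead of the CPU.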

The goal, of course, is to make applications run faster, and from that standpoint the new server seems to be proving its worth. Compared to a similarly equipped dual-socket Intel Xeon server with four of the older K80 GPUs, the new S822LC runs selected HPC codes about twice as fast, including Lattice QCD (1.9x), CPMD/Car–Parrinello Molecular Dynamics (2.25x), and SOAP-dp, a genomics code (2x). IBM also recorded a 1.75x speedup on the High Performance Conjugate Gradients (HPCG) Benchmark and a 2.4x speedup on Kinetica’s in-memory database. Similarly, a deep learning benchmark measured using AlexNet with NVCaffe showed better than a 2x speedup on the new server compared to Facebook’s “Big Sur” box outfitted with twice as many (8) Maxwell-generation Tesla M40 GPUs.

Systems have already been delivered to a handful of early customers. According to IBM, these include a large multinational retail corporation and the US Department of Energy’s Oak Ridge National Laboratory (ORNL) and Lawrence Livermore National Laboratory (LLNL). The installations at the DOE will serve as test beds for the Summit and Sierra supercomputers that will be delivered in 2017 to Oak Ridge and Lawrence Livermore, respectively. Those future 100-plus-petaflop systems will be equipped with next-generation processors, the IBM Power9 CPU and the NVIDIA Volta GPU, but will use the same NVLink model for inter-processor communication.

As is evident from the AlexNet benchmark mentioned previously, the new S822LC also appears to be a great fit for deep learning applications, although IBM has yet to announce any early wins here. That’s likely because the big customers in this space, the hyperscale providers, tend to be rather tight-lipped about the makeup of their computing infrastructure until it’s been around for a few years. But according to Sumit Gupta, VP of the High Performance Computing and Analytics group at IBM, they have been engaged with a number of hyperscalers and are devoting a lot of attention to this application segment. “We have a huge push around deep learning right now, with a very large software team working on optimizing deep learning frameworks for this server and to take advantage of NVLink,” Gupta told TOP500 news.

And even though the Googles, Amazons, and Baidus of the world are the biggest consumers of deep learning gear at this point, Gupta thinks the area with the biggest long-term opportunity for deep learning is in IBM’s sweet spot: the enterprise. Although this segment of the market is in its early stages, Gupta said they have a few engagements with some big commercial clients here, and are working with partners to build targeted solutions for retail, banking, and automotive customers.

The S822LC for High Performance Computing can be ordered today, but won’t be generally available until September 26. According to Gupta, there is plenty of interest in the new platform, with a bunch of customers wanting just to kick the tires with a few nodes, while others are planning to install decent-sized clusters with dozens of servers. “We have a pretty overwhelming demand right now,” he said.