April 17, 2017

By: Michael Feldman

When a poker-playing AI program developed at Carnegie Mellon University challenged a group of machine learning-savvy engineers and investors in China, the results were the same as when the software went up against professional card players: it beat its human competition like a drum. And that points to AI’s greatest strength, as well as its greatest weakness.

Even the developers of the poker-playing program probably wouldn’t have a chance against their own creation. That’s because Lengpudashi – an updated version of Libratus, which trounced four of the best poker players in the world back in January – isn’t really an algorithm for poker playing. It’s an algorithm for developing a poker-playing strategy. And it develops this strategy dynamically, in real time.

In this case, when Lengpudashi plays, it’s running on Bridges, a state-of-the-art supercomputer housed at the Pittsburgh Supercomputing Center (PSC). The software analyzes the cards it’s dealt and the bets of the other players based on a strategy generated from a generalized model that is encapsulated in a neural network. The program also does a strategy review and update every night during a match to patch algorithmic weaknesses uncovered during the day.

But there is no way to query Lengpudashi about why it executed a particular tactic during a hand. The algorithm that does this operates at a lower level than the model upon which it is based. If Lengpudashi could be induced to somehow explain its decision, the answer might be no more useful than a computer memory dump.

The enigmatic nature of poker-playing AI is not much of a problem, nor would it be for something like a product recommendation engine or a language translation application. But when it comes to things like disease diagnosis, understandability becomes critical. A recent article in MIT Technology Review examining the problem described the example of Deep Patient, a machine learning program that is able to make highly accurate diagnoses for many diseases based on patterns revealed from patient records at Mount Sinai Hospital in New York.

One disease that Deep Patient is apparently good at uncovering is schizophrenia, which is often quite difficult for trained physicians to diagnose. But since there is currently no way for the software to trace its logic, its use is somewhat limited. (Imagine the ethical and legal ramifications of treating someone for schizophrenia based on black box software.) Joel Dudley, the leader of the Deep Patient work at Mount Sinai, noted that although they can build these models, “we just don’t know how they work.” As a result, Deep Patient results have to be scrutinized by physicians to be of any sort of use in a clinical setting.

The financial community is running into the same sort of problem, but with a different set of concerns. Another article from MIT Technology Review describes the problems associated with the mysterious inner workings of AI software that determines credit risks or detects fraudulent behavior. In the case of Capital One, for example, the company would like to use AI to identify people who are too great a risk to be issued its credit card. But regulatory requirements prevent it from doing so, since credit companies are on the hook to provide an explanation for rejecting an applicant. The company is now looking into ways to extract evidence from the software that can satisfy these regulations. Applications like these are increasingly going to come under government scrutiny as banks and other financial institutions integrate this type of sophisticated analytics into their business.

AI in the military arena also suffers from the explainability problem. In a third report from MIT Technology Review, the author delves into the challenges of relying on autonomous systems for military operations. As in the medical domain, this often involves life and death decisions, which again leads to similar types of ethical and legal dilemmas.

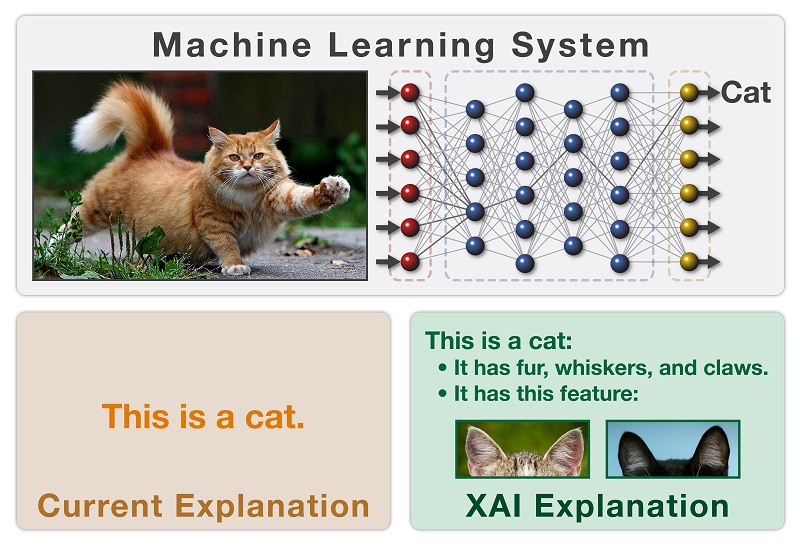

The article mentions an effort by DARPA, the US Defense Advanced Research Projects Agency, to develop technologies that can inject transparency into the decision-making process behind these autonomous systems. That effort has been consolidated into the Explainable Artificial Intelligence (XAI) program. XAI’s main goal is to develop techniques and tools that are able to express the rationale for decisions made by autonomous agents to human users.

In a nutshell, the XAI approach is to enhance machine learning software so that it can provide explanation dialogues for the end user, while maintaining the software’s performance and accuracy. The anticipated result will be a toolkit library of machine learning and human-computer interface software modules that can be employed to build explainable AI systems. The intent is to make this toolkit general-purpose enough that it could be used across other application domains as well.

Source: DARPA

In any case, it looks like explainable AI is poised to become a much larger issue as these neural networks move from the research arena into real-world production settings where engendering human trust is mandatory. As this happens, software makers will confront one of the fundamental challenges of machine intelligence, namely that describing the logic of the analysis might end up being more difficult than the analysis itself.