Aug. 21, 2018

By: Michael Feldman

Last month, a team of researcher from University of Michigan and Zhejiang University announced that they have figured out a way to execute HPC-style matrix-vector operations on a memristor-based computer.

The announcement described how a crossbar memristor system initially used to implement artificial neural networks, was re-tasked to perform the type of matrix math used in HPC for scientific and engineering simulations. By taking advantage of the memristor’s ability to act as both a data storage medium and a computing device, the researchers think the technology has the potential to overcome the Von Neumann memory bottleneck.

The announcement described how a crossbar memristor system initially used to implement artificial neural networks, was re-tasked to perform the type of matrix math used in HPC for scientific and engineering simulations. By taking advantage of the memristor’s ability to act as both a data storage medium and a computing device, the researchers think the technology has the potential to overcome the Von Neumann memory bottleneck.

“Historically, the semiconductor industry has improved performance by making devices faster. But although the processors and memories are very fast, they can’t be efficient because they have to wait for data to come in and out,” said Wei Lu, U-M professor of electrical and computer engineering and co-author on the research paper describing the work. Lu is a co-founder of memristor startup Crossbar Inc, a technology spinout of the University of Michigan.

One of the challenges of using memristors for processing is that they are not naturally adept at high-precision math. Since they store information in the form of resistances, rather than 0s or 1s, they operate more like analog computing devices than digital ones. This makes them a good choice for things like neural networks and neuromorphic computing, but additional smarts are needed to turn those resistance levels into precise numbers.

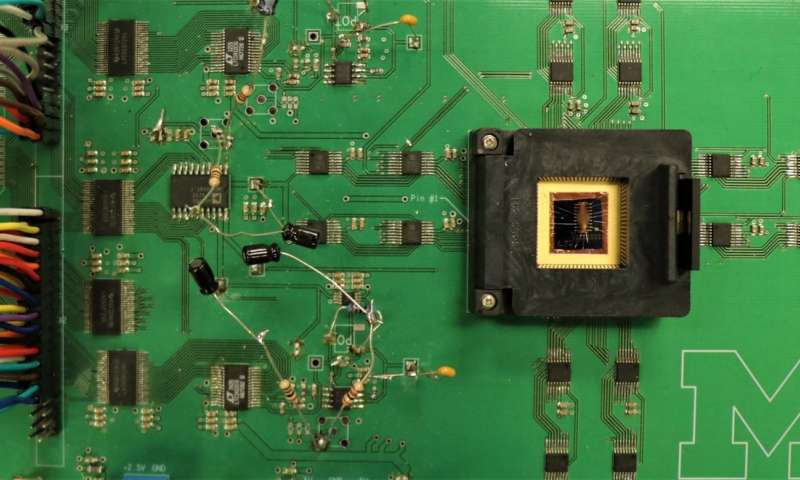

To solve this problem, the researchers built what they call a memory-processing unit, in this case, a test board that contained a 32 x 32 crossbar array of tantalum oxide-based memristors. The additional smarts consisted of digitizing the resistance of the memristors such that they mapped to 16-bit precision numbers. Once that was done, an operation that could multiply and sum the rows and columns of the grid can be performed simply by measuring the current at the end of each column. This enables the system to perform an analogue multiplication, in memory, and without the bother of having to retrieve all the data cells individually.

“We get the multiplication and addition in one step,” said Lu. “It’s taken care of through physical laws. We don’t need to manually multiply and sum in a processor,”

In their experiments, the researchers used a partial differential equation (PDE) solver employed in plasma hydrodynamics simulations. According to them, they achieved results comparable to digital PDE solvers, but with improvements in power efficiency and throughput.

In the future, they envision production systems, supporting 64-bit precision, and comprised of arrays of crossbar grids for large-scale computations. Better yet, these same systems can also be employed for neural networks and other types of lower-precision applications. The researchers say that, according to their analysis, these general-purpose machines will “considerably outperform” existing solutions, even those built from custom-built ASICs. If all of that comes to pass, memristor-based supercomputing becomes a real possibility.

Image: Memristor array on circuit board. Credit: Mohammed Zidan, Nanoelectronics group, University of Michigan.