Oct. 9, 2017

By: Michael Feldman

A team of supercomputer programmers at Lawrence Livermore National Laboratory (LLNL) is working with Procter & Gamble to help the company design better paper products.

Source: Lawrence Livermore National Laboratory


The effort is part of the Department of Energy’s HPC for Manufacturing Program (HPC4Mfg), an initiative designed to make some of the fastest supercomputers in the world, and the expertise that surrounds them, available to US manufacturers. The program is spearheaded by Lawrence Livermore, but brings in other DOE labs, including Lawrence Berkeley and Oak Ridge national laboratories. The Procter & Gamble project was one of nine selected in 2016. The work was completed in June and made public in a news release on October 4.

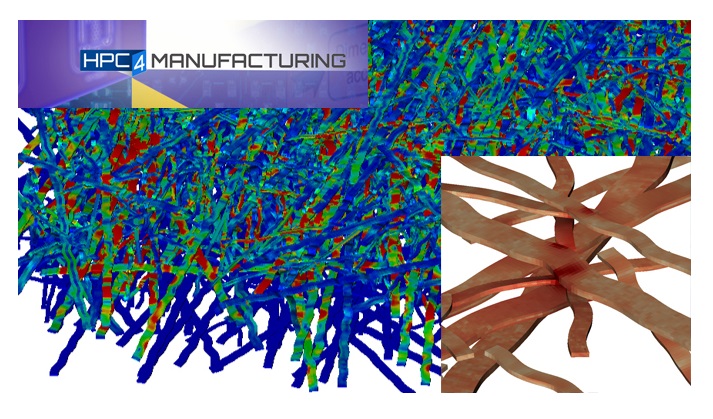

In this case, Procter & Gamble was interested in simulating the fiber structure of paper in order to manufacture products like napkins and tissues more efficiently. The goal was to reduce paper pulp in products by 20 percent, which could provide significant cost and energy savings. Procter & Gamble has its own in-house cluster, but the problem required a model that would scale beyond the practical limits of the company’s hardware.

"The problem is larger than the industry is comfortable with, but we have machines with 300,000 cores, so it's small in comparison to some of the things we run," said LLNL researcher Will Elmer. "We found that you can save on design cycle time. Instead of having to wait almost a day (19 hours), you can do the mesh generation step in five minutes. You can then run through many different designs quicker."
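For a sense of scale, here is a quick back-of-the-envelope sketch that uses only the two times from Elmer's quote. It covers the mesh generation step alone, not the full simulation, and the variable names are purely illustrative.

```python
# Mesh generation times quoted by Elmer, converted to minutes.
before_min = 19 * 60   # "almost a day (19 hours)"
after_min = 5          # "five minutes"

# Ratio of the old time to the new time for the meshing step only.
speedup = before_min / after_min
print(f"Implied mesh-generation speedup: about {speedup:.0f}x")  # about 228x
```

In other words, a meshing step that once took most of a day could, in principle, be repeated a couple of hundred times in the same span.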

Elmer and his team of lab programmers developed a Python program called p-fiber, which prepares the fiber geometry and meshing input needed for simulating thousands of fibers. The program relies on Cubit, a meshing tool developed at Sandia National Laboratories, to generate the mesh for each individual fiber. The mesh is used as input for ParaDyn, a parallel-computing version of DYNA3D, which predicts thermomechanical behavior.

Under the model, each paper fiber might consist of as many as 3,000 finite elements, which means millions of finite elements had to be computed. According to Elmer, he and his team were able to model 15,000 paper fibers (20 million finite elements), the largest simulation of this kind ever attempted. In the process, they were able to scale the p-fiber code to supercomputing levels, as well as study the scalability of ParaDyn.
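The element counts above can be sanity-checked with a few lines of arithmetic. This is a rough sketch that treats the reported 20 million as an approximate total and the 3,000 figure as a per-fiber ceiling; the variable names are illustrative.

```python
# Figures reported in the article (the total is approximate).
fibers = 15_000
total_elements = 20_000_000
max_per_fiber = 3_000   # "as many as 3,000 finite elements" per fiber

# Average mesh size per fiber implied by the reported totals.
avg_per_fiber = total_elements / fibers

# Ceiling on the total if every fiber used the maximum element count.
upper_bound = fibers * max_per_fiber

print(f"Average elements per fiber: about {avg_per_fiber:,.0f}")
print(f"Upper bound on total elements: {upper_bound:,}")
```

The implied average of roughly 1,333 elements per fiber sits well under the 3,000-element ceiling, so the reported totals are consistent with one another.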

Along the way, the team was able to fix some ParaDyn bugs, while doubling the code’s speed on Vulcan, a five-petaflop Power7-based supercomputer running at Lawrence Livermore. As a side note, not all of Vulcan was available to run the ParaDyn code, since the supercomputer mostly serves the NNSA’s nuclear stockpile mission. Just 30 percent of the machine’s nodes – about 1.5 petaflops – are available for industrial programs like HPC4Mfg.

There is no word on whether Procter & Gamble intends to use the results of the effort to tweak its paper manufacturing process or will continue to study the model. The company has the option to license p-fiber.

"There's still a lot of work to be done, but I'm happy with the way this worked," said Elmer. "I think it's gotten a lot of visibility and it's a good example of working with a sophisticated user like Procter & Gamble. It filled out the portfolio of HPC for Manufacturing at that high level. It was a good way to get the lab engaged in U.S. manufacturing competitiveness."