March 27, 2018

By: Michael Feldman

NVIDIA kicked off this year’s GPU Technology Conference (GTC 2018) with a trio of announcements about new Tesla products, including an upgraded V100, an NVLink switch, and a new DGX-2 machine learning platform.

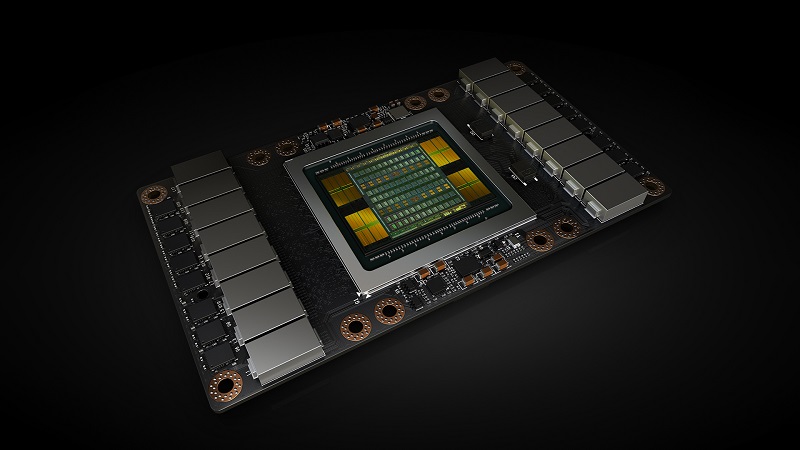

The enhancement to the V100 is a two-fold increase in local memory capacity, which brings it up to 32 GB. This is the stacked HBM2 memory that sits on the same silicon substrate as the GPU chip and offers a high bandwidth, low latency data store for the graphics processor. The additional capacity will enable data scientists to construct larger and more complex neural networks for training deep learning models, which can now easily encompass hundreds of layers and billions of parameters. And for memory-constrained HPC workloads, the extra 16 GB will also improve performance – up to 50 percent on some applications, according to NVIDIA.

In all cases, the value of the larger local memory is to enable more processing to take place on the GPU, without having to keep shunting data to and from main memory on the host CPU board. In general, that should provide better performance on applications that are bottlenecked by CPU-GPU memory transfers. And yes, the fact that Intel’s upcoming Neural Network Processor (NNP) will also come equipped with 32 GB of HBM2 might have provided some additional incentive for the Nvidians to boost the V100’s memory footprint.

SAP has already evaluated the new 32 GB parts for its Brand Impact application, a machine learning code that performs real-time video analysis of brand exposure. According to Michael Kemelmakher of the SAP Innovation Center in Israel, the extra memory on the upgraded V100 GPUs “improved our ability to handle higher definition images on a larger ResNet-152 model, reducing error rate by 40 percent on average.”

Although NVIDIA didn’t mention it, somewhere along the way the NVLink version of the V100 got a marginal performance boost: from 7.5 to 7.8 in double precision teraflops and 120 to 125 in machine learning/Tensor Cores teraflops. Single precision performance was boosted by a similar amount. That doesn’t make a whole lot of difference, except an eight-GPU server can now boast a full petaflop’s worth of machine learning performance.

The 32 GB V100 is available now and will be the basis for all NVIDIA DGX systems going forward. Starting in the second half of the year, the new V100 will be available in GPU-accelerated gear from IBM, Cray, HPE, Lenovo, Supermicro, and Tyan. Oracle announced it will offer the upgraded V100 to its cloud customers, also in the second half of the year.

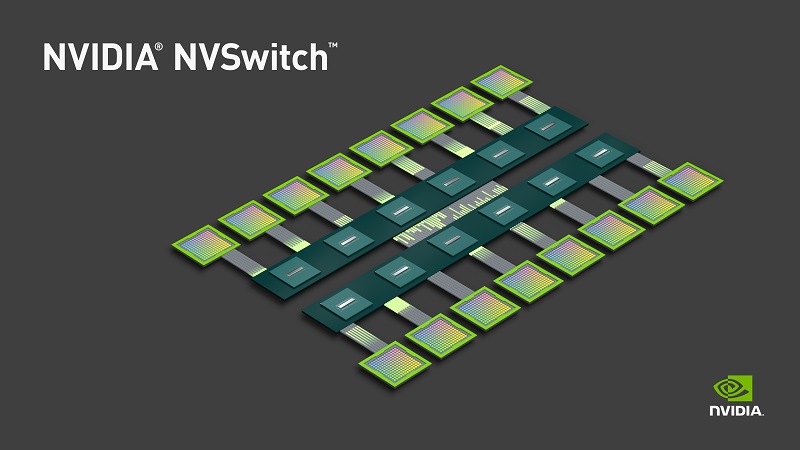

Perhaps the more important product news is the unveiling of an NVLink switch, which NVIDIA has dubbed NVSwitch. It’s a chip that provides 18 ports of NVLink 2.0 communications and is able to connect 16 V100 GPUs in an all-to-all topology. The TSMC-manufactured device, which sports more than two billion transistors, supports an aggregate bandwidth of 900 GB/sec. By using the memory coherency capability of NVLink, the switch fabric enables an application running on multiple GPUs to treat the aggregate HBM2 as a unified memory space.

The switch was conceived to enable system designers to architect more scalable GPU-powered platforms, again in order to train larger and more complex neural networks. Its first design win is NVIDIA’s own DGX-2 platform, which was also unveiled at GTC. The DGX-2 will house 16 of the new memory-enhanced V100 GPUs, twice as many as can be had in the original DGX-1. And because this is a 12-chip NVSwitch fabric, each GPU will be able to talk to each of the other GPUs at 300 GB/sec.
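The bandwidth figures quoted for the switch and for each GPU are mutually consistent if one assumes NVIDIA's published NVLink 2.0 numbers, which the article itself does not state: 50 GB/sec of bidirectional bandwidth per link and six links per V100. A quick sketch of that arithmetic:

```python
# Assumptions (from NVIDIA's public NVLink 2.0 specs, not from this article):
NVLINK2_GB_PER_SEC_PER_LINK = 50  # bidirectional GB/sec per NVLink 2.0 link
LINKS_PER_V100 = 6                # NVLink links on each V100

# Figure taken from the article.
NVSWITCH_PORTS = 18


def aggregate_bandwidth(links: int, per_link: int = NVLINK2_GB_PER_SEC_PER_LINK) -> int:
    """Total bidirectional bandwidth in GB/sec across a number of links."""
    return links * per_link


switch_bw = aggregate_bandwidth(NVSWITCH_PORTS)  # aggregate for one NVSwitch chip
gpu_bw = aggregate_bandwidth(LINKS_PER_V100)     # aggregate for one V100

print(f"NVSwitch: {switch_bw} GB/sec, V100: {gpu_bw} GB/sec")
```

Under those assumptions, 18 ports account for the 900 GB/sec switch figure and six links account for the 300 GB/sec per-GPU figure.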

As you might suspect, the eight additional GPUs on the DGX-2 double the raw performance of the DGX-1, providing two petaflops of machine learning/Tensor Cores performance (or 124.8 double precision teraflops, if you’re keeping score at home). The host side gets an upgrade as well, with two Xeon Platinum CPUs and 1.5 terabytes of main memory. For good measure, the system is also outfitted with 30 TB of NVMe SSDs.
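For readers who are keeping score, the system-level numbers follow directly from the per-GPU peaks quoted earlier (7.8 double precision teraflops and 125 Tensor Core teraflops per V100). The sketch below simply multiplies them out; it assumes perfect linear scaling, which is how peak figures are quoted, not how real applications behave.

```python
# Per-GPU peak figures quoted in the article for the NVLink V100.
V100_FP64_TFLOPS = 7.8
V100_TENSOR_TFLOPS = 125.0


def aggregate_tflops(num_gpus: int, per_gpu_tflops: float) -> float:
    """Peak aggregate throughput, assuming perfect linear scaling."""
    return num_gpus * per_gpu_tflops


dgx1_tensor = aggregate_tflops(8, V100_TENSOR_TFLOPS)   # eight-GPU DGX-1
dgx2_tensor = aggregate_tflops(16, V100_TENSOR_TFLOPS)  # sixteen-GPU DGX-2
dgx2_fp64 = aggregate_tflops(16, V100_FP64_TFLOPS)

print(f"DGX-1 Tensor Core peak: {dgx1_tensor / 1000:.1f} petaflops")
print(f"DGX-2 Tensor Core peak: {dgx2_tensor / 1000:.1f} petaflops")
print(f"DGX-2 double precision peak: {dgx2_fp64:.1f} teraflops")
```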

By the way, the upgraded DGX also provides eight InfiniBand EDR or 100 GbE ports, which can be used to chain together multiple DGX-2 boxes. That offers the prospect of building tightly integrated, multi-petaflop clusters, where all the GPUs are conversing over NVLink. We wouldn’t be surprised to see such a system turn up on a well-known supercomputer list come June.

NVIDIA is selling the new DGX-2 for $399,000 – a hefty increase from the switchless, eight-GPU DGX-1, which lists at $129,000. And at 10 kilowatts, the DGX-2 is going to be more expensive to run than its 3.5 kW predecessor. But for the extra cost, you get a lot more performance.

According to NVIDIA, a DGX-2 system can train FAIRSeq, an advanced language translation model developed by Facebook, in about a day and a half. That’s 10 times faster than what an eight-GPU DGX-1 was able to do last year using the older 16 GB V100s. Not all of that speedup is attributed to the extra GPUs and greater memory capacity, however. NVIDIA has also updated the software stack, which includes some additional optimizations.
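To put the FAIRSeq claim next to the price and power figures, here is a rough back-of-the-envelope sketch. It reads the DGX-1 list price as $129,000 (an assumption about the quoted figure) and takes NVIDIA's 10x speedup at face value, software gains included, so treat the output as illustrative and not as a benchmark.

```python
# Figures from the article, except where noted.
DGX2_PRICE_USD = 399_000
DGX1_PRICE_USD = 129_000  # assumption: the article's DGX-1 price read as $129,000
DGX2_POWER_KW = 10.0
DGX1_POWER_KW = 3.5
FAIRSEQ_SPEEDUP = 10.0    # NVIDIA's claim; includes software-stack improvements

# How much more the DGX-2 costs to buy and to power, relative to the DGX-1.
price_ratio = DGX2_PRICE_USD / DGX1_PRICE_USD
power_ratio = DGX2_POWER_KW / DGX1_POWER_KW

# Claimed speedup normalized by the extra purchase cost and extra power draw.
speedup_per_dollar = FAIRSEQ_SPEEDUP / price_ratio
speedup_per_watt = FAIRSEQ_SPEEDUP / power_ratio

print(f"Price ratio: {price_ratio:.2f}x, power ratio: {power_ratio:.2f}x")
print(f"Speedup per dollar: {speedup_per_dollar:.2f}x, per watt: {speedup_per_watt:.2f}x")
```

On this one workload, the claimed speedup outpaces both the price increase and the power increase by roughly a factor of three.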

In fact, to go along with the newly announced hardware, NVIDIA has provided updated versions of CUDA, TensorRT, cuBLAS, cuDNN, NCCL, and other GPU-related middleware. The company has also enhanced its implementations of the major deep learning frameworks (TensorFlow, Caffe2, MXNet, etc.) to take advantage of the 32 GB V100, NVSwitch, and the new DGX configurations.

While NVIDIA didn’t announce any future GPU architectures at this year’s GTC, the company did try to make the case that its combo of hardware and software improvements is advancing performance at a breakneck pace. From 2013, when NVIDIA was hawking its Fermi-class M2090 GPUs, to the Volta-class V100 in 2018, deep learning codes have seen up to a 500-fold performance increase, while more traditional HPC applications have enjoyed a 25x increase. In either case, that’s quite a bit better than what Moore’s Law is providing.
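To see how those multiples compare with Moore’s Law, it helps to annualize them. The sketch below assumes the commonly cited two-year doubling period for Moore’s Law (an assumption, not a figure from NVIDIA) and treats the 500x number as the best case, since NVIDIA quoted it as "up to".

```python
def annualized_factor(total_gain: float, years: float) -> float:
    """Constant yearly growth factor that compounds to total_gain over years."""
    return total_gain ** (1.0 / years)


YEARS = 2018 - 2013         # span quoted in the article
MOORE_DOUBLING_YEARS = 2.0  # assumption: transistor density doubles every two years

deep_learning_annual = annualized_factor(500.0, YEARS)  # best-case deep learning gain
hpc_annual = annualized_factor(25.0, YEARS)             # traditional HPC gain

# What a two-year doubling cadence would deliver over the same span.
moore_total = 2.0 ** (YEARS / MOORE_DOUBLING_YEARS)
moore_annual = annualized_factor(moore_total, YEARS)

print(f"Deep learning: {deep_learning_annual:.2f}x per year")
print(f"HPC:           {hpc_annual:.2f}x per year")
print(f"Moore's Law:   {moore_annual:.2f}x per year ({moore_total:.1f}x over {YEARS} years)")
```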

And that’s probably the big takeaway from what NVIDIA has been up to since the last GTC. Instead of relying on transistor shrinking, the company has employed hardware-software co-design, more specialized devices, and architectural tweaking to power its GPU business. And yet, we’re still left wondering what NVIDIA has in mind for its next GPU architecture after Volta. Perhaps next year…