May 26, 2016

By: Michael Feldman

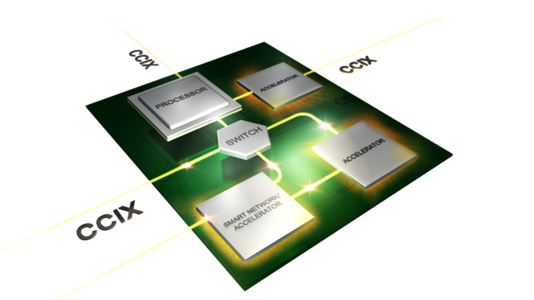

If you happen to think there aren’t enough interconnect standards for accelerators in the world, then you’ll be happy to know that one more has been added to the heap. The new technology, known as the Cache Coherent Interconnect for Acceleration (CCIX), is being crafted as an open standard and aims to provide a high-performance, cache-coherent data link between processor hosts and coprocessor accelerators.

In a nutshell, CCIX will allow applications to easily access data across the memory spaces of all sorts of connected special-purpose microprocessors. Cache coherency will be supported in hardware, making such accesses transparent to the application software. Unlike the PCIe bus, which has traditionally been used to hook accelerators to their CPU host, no device driver will be required. That simplifies programming considerably, while at the same time improving performance.

Although no specific details have been released regarding data transfer speeds, the CCIX website claims “higher bandwidth compared to the existing interface” (presumably a reference to PCIe) and “orders of magnitude improvement in application latency.” With claims such as those, one can surmise this technology would be applicable to traditional high-performance computing (HPC), but the primary focus, at least initially, will be on accelerators used in big data analytics, search, machine learning, network function virtualization (NFV), wireless 4G/5G, in-memory database processing, video analytics, and network processing.

Since this is being devised as an open standard, the obligatory consortium has formed. Initial backers include AMD, ARM, Huawei, IBM, Mellanox, Qualcomm, and Xilinx. The membership list suggests an ARM-Power alliance could coalesce around this technology, which could provide some needed market momentum for both camps against the Intel juggernaut. If AMD supports the CCIX standard for its x86-based Opteron processors and its discrete GPUs (or even APUs), that would broaden the scope of the interconnect in another useful way.

IBM already has its own coherent accelerator interconnect for Power processors, CAPI (Coherent Accelerator Processor Interface), so its inclusion here signals a willingness to support a more vendor-neutral approach, either as a separate interface or, more likely, as an integrated component of CAPI.

The absence of NVIDIA is perhaps a bit curious, given the company’s plans to spread its GPU accelerators far and wide in the datacenter. For the time being, though, the Nvidians appear intent on supporting their own proprietary NVLink interconnect, which hooks together multiple CUDA GPUs and, with IBM’s help, Power8 CPUs. If CCIX catches on, it’s a good bet NVIDIA will integrate the technology into its own hardware.

True to form, Intel remains the odd man out, and for good reason. The company is systematically moving away from the processor-coprocessor model of application acceleration, making external device interconnects such as CCIX superfluous. Intel’s new Knights Landing Xeon Phi is being offered as a standalone processor that needs no host (along with a PCIe-connected version, which does require one). Likewise, Intel’s April announcement of a Xeon-FPGA multichip module, using Altera technology, also dispenses with the need for an external interconnect. It’s pretty clear Intel’s strategy is to integrate accelerator smarts onto the processor or into the package whenever possible.

Thus the success of CCIX is likely to rely on the non-Intel portion of the server market. Given that Intel owns more than 90 percent of the server processor market, that’s a rather tall order. Nevertheless, Intel silicon does not currently dominate the accelerator market in the datacenter, which has provided an opening for other players and, by extension, for new standards like CCIX.