By: TOP500 Team

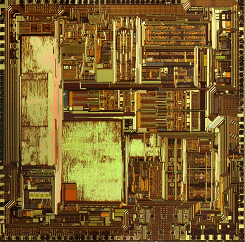

An article published this week in MIT Technology Review ponders the fate of the computing industry after Moore’s Law. The “law” is actually an observation made in 1965 by Intel cofounder Gordon Moore that transistors were shrinking with each generation of semiconductor technology, doubling in density every year. That pace was later restated as every two years, with 18 months also commonly cited. The article points out that Intel has set the pace of transistor shrinking for the industry over the last 50 years, but within five years or so, semiconductor technology is going to run out of runway.

According to Thomas Wenisch, an assistant professor at the University of Michigan, the mobile computing space will feel the effects of the demise of Moore’s Law later than the rest of the industry, since the technology used in the chips for those devices tends to lag that used in other computers. Data center systems in particular, including hyperscale infrastructure and supercomputers, are going to be the first to suffer the consequences.

In fact, as Moore’s Law has slowed in recent years, it already appears to be affecting high performance computing. As Horst Simon, deputy director of Lawrence Berkeley National Laboratory, notes in the article, the performance of the fastest supercomputers in the world has not advanced as quickly over the last three years. That affects all the high-end research and government lab programs that have come to rely on ever-faster machines.

The entire industry has relied on Moore’s Law to continually deliver cheaper and faster computers. As the article describes, the law has also provided a kind of “heartbeat” that keeps IT vendors’ R&D, product development, and user expectations in sync. As a result, Intel and other chipmakers know that when they deliver faster, denser processors built on a more advanced manufacturing technology every two years, the market demand for those chips is already in place.

Other technologies are in the pipeline to pick up some of the slack after transistor shrinkage stops. Most of these revolve around improving the design of chips or changing the way they work. For example, more specialized processors optimized for specific workloads, like NVIDIA’s latest GPUs optimized for machine learning, will provide better application performance. So will FPGAs in some situations, where specific algorithms can be hardwired into these devices. Such solutions can certainly boost performance, but in a less predictable manner than Moore’s Law did.

For the complete write-up, read the article in MIT Technology Review.