July 4, 2017

By: Michael Feldman

The Jülich Supercomputing Center (JSC) has kicked off DEEP-EST, an EU-funded project that aims to build a supercomputer capable of handling both high performance computing and high performance data analytics workloads.

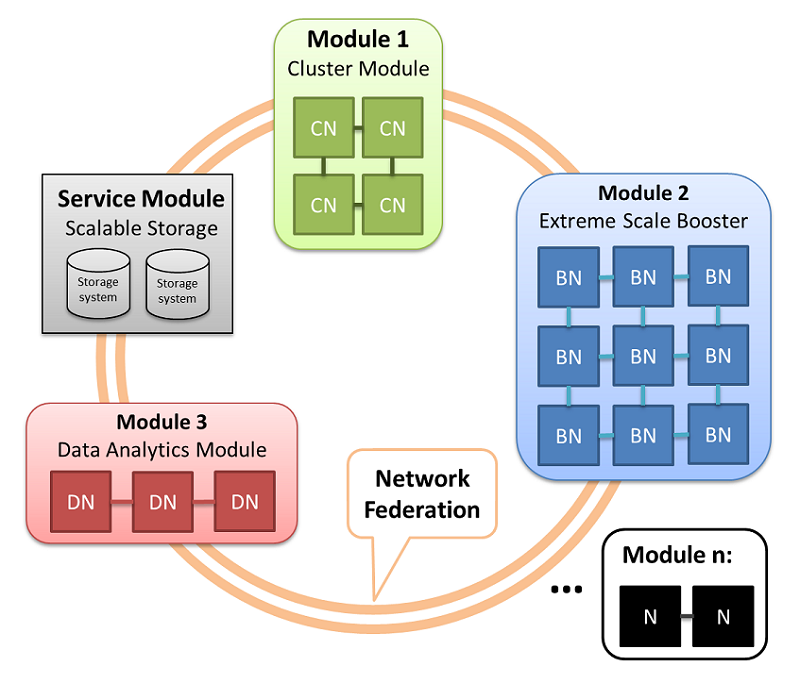

DEEP-EST is the sequel to the DEEP and DEEP-ER projects, which defined a type of heterogeneous architecture known as the "Cluster-Booster" model. Both of those efforts, which are now complete, focused primarily on more traditional simulation workloads. The Cluster module, based on commodity cluster gear, was designed for codes with limited scalability that require high single-thread performance. The Booster module, which incorporated Intel's manycore Xeon Phi processors, was targeted at highly scalable, massively parallel applications. The DEEP-ER work also included architectural elements aimed at data-intensive codes.

Source: Jülich Supercomputing Center


The overall rationale for the approach is that instead of using expansion cards to add compute or storage capabilities to a generic server infrastructure, these components are pooled into separate modules. As in the Cluster and Booster examples, the modules are designed to handle specific types of tasks most efficiently.

DEEP-EST continues further down the heterogeneous path, adding a Data Analytics module designed specifically for this type of work. According to JSC, the module will consist of FPGAs embedded in some kind of high-capacity storage element. The idea is to "close a gap resulting from the different hardware requirements for high-performance computing (HPC) and high-performance data analytics (HPDA)."

Dr. Estela Suarez, JSC’s project lead on the DEEP-EST work, explains it thusly:

“For conventional supercomputing applications, such as simulations from quantum physics, an extremely large number of mathematical operations are applied to a relatively small set of data. This requires systems with a lot of computing power but relatively little storage,” she says. “But applications are becoming significantly more complex and the volumes of data from present-day experiments, for example at CERN, are increasing in size. This means that supercomputers will require drastically larger storage capacities – and they must be located as close to the processors as possible. Only then can the data be processed in a fast and energy-efficient manner.”

Six HPDA-type applications drawn from European research will be used to drive the development of the DEEP-EST module. One of these applications computes the behavior of solar storms and their effect on communications on Earth. In that case, the data-intensive analysis of satellite imagery would be allocated to the Data Analytics module, while the solar storm simulation portion of the application would be distributed across the Cluster and Booster modules.
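To make the division of labor concrete, here is a minimal Python sketch of how an application's phases might be mapped onto the three modules. It is illustrative only, not JSC's scheduler or any DEEP-EST software. It assumes two hypothetical per-phase flags, `data_intensive` and `highly_scalable`, and a made-up split of the solar storm simulation into a scalable part and a less scalable part. The article does not say how the simulation is actually divided between Cluster and Booster.

```python
from dataclasses import dataclass


@dataclass
class Phase:
    name: str
    data_intensive: bool   # hypothetical flag: phase is dominated by data volume/I/O
    highly_scalable: bool  # hypothetical flag: phase scales across many manycore nodes


def assign_module(phase: Phase) -> str:
    """Pick a module using the simplified rules described in the article."""
    if phase.data_intensive:
        return "Data Analytics"   # storage-heavy analysis near the data
    if phase.highly_scalable:
        return "Booster"          # massively parallel, highly scalable code
    return "Cluster"              # limited scalability, needs single-thread speed


def place(phases: list[Phase]) -> dict[str, str]:
    """Return a mapping of phase name -> module for one application."""
    return {p.name: assign_module(p) for p in phases}


# Hypothetical decomposition of the solar storm application.
solar_storm_app = [
    Phase("satellite_image_analysis", data_intensive=True, highly_scalable=False),
    Phase("simulation_scalable_part", data_intensive=False, highly_scalable=True),
    Phase("simulation_serial_part", data_intensive=False, highly_scalable=False),
]

if __name__ == "__main__":
    for name, module in place(solar_storm_app).items():
        print(f"{name:28s} -> {module}")
```

The rule order matters in this sketch. Data-intensive phases are routed first, so a phase that is both data-heavy and scalable lands on the Data Analytics module. A real system would weigh these trade-offs far more finely.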

The end result of the project will be a prototype system that is expected to demonstrate the advantages of the modular concept. Delivery is scheduled for 2020.