By: Rob Farber

Performing apples-to-apples optimized performance comparisons between different machine architectures is always a challenge. Intel has observed that the CPU implementations of many machine learning and deep learning packages have not been fully optimized for modern CPU architectures. For this reason, Intel made a number of machine learning announcements following the recent launch of Intel® Xeon Phi™ (formerly Knights Landing) processors.

Machine learning is far more than a compute-bound problem, because the process of training algorithms (adjusting the weights in a neural network or model to achieve a minimum error) generally requires a distributed computing environment to deliver a reasonable time-to-model runtime. For this reason, the training process is flop/s, network, and memory intensive. By contrast, the evaluation (also known as scoring or inference step) of data using a trained algorithm is relatively light weight and can run very quickly, in many cases real-time, on a variety of hardware platforms.

The Intel ISC’16 announcements were phrased in terms of the network, memory, software, and compute technologies that are part of Intel® Scalable System Framework (Intel® SSF). Optimizing the CPU machine learning pathway for Intel products has greatly increased performance over previously reported results. The machine learning community can expect:

- Training: up to 38% better scaling over GPU-accelerated machine learning* and an up to 50x speedup when using 128 Intel Xeon Phi nodes compared to a single Intel Xeon Phi node*.

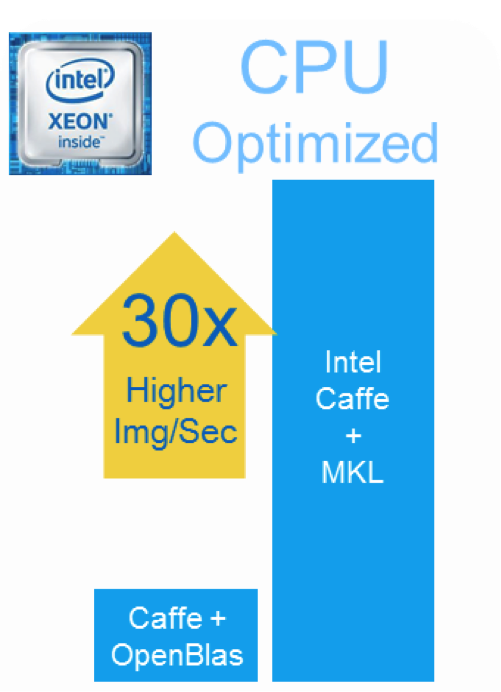

- Prediction: up to 30x improvement in scoring performance* (also known as inference or prediction) on the Intel® Xeon® processor product E5 family using the Intel-optimized Caffe framework with the latest Intel® Math Kernel Library (Intel® MKL) package. This is particularly important as Intel notes the Intel Xeon E5 processor family is the most widely deployed processor for machine learning inference in the world* as a 30x performance improvement can deliver significant time and power savings in the data center.

Software is a key part of Intel SSF, which includes contributions by Intel to the open source community. The Intel ISC’16 announcements included open-sourcing the CPU-optimized MKL DNN (deep neural network) library for machine learning. Intel created a single Internet portal for all their machine learning efforts at http://intel.com/machinelearning. Through this portal, Intel hopes to train 100,000 developers to harness the benefits of CPU-based machine learning. They are supporting this effort by giving early access to the new Intel Xeon Phi processors, Intel® Omni-path (Intel OPA) and other cutting-edge machine learning technologies to top research academics.

The new Intel Xeon Phi product family, the centerpiece of the Intel ISC’16 announcements, is important as it provides the TF/s floating-point performance needed to achieve rapid time-to-model when training machine and deep learning algorithms. To better understand the importance of Intel Xeon Phi processors and how the different components of Intel SSF benefit machine learning, see the ongoing machine learning series covering Intel Xeon Phi, Intel OPA, and the importance of Lustre to both data pre-processing and the training of machine and deep learning algorithms.

Intel scales machine learning for deeper insight

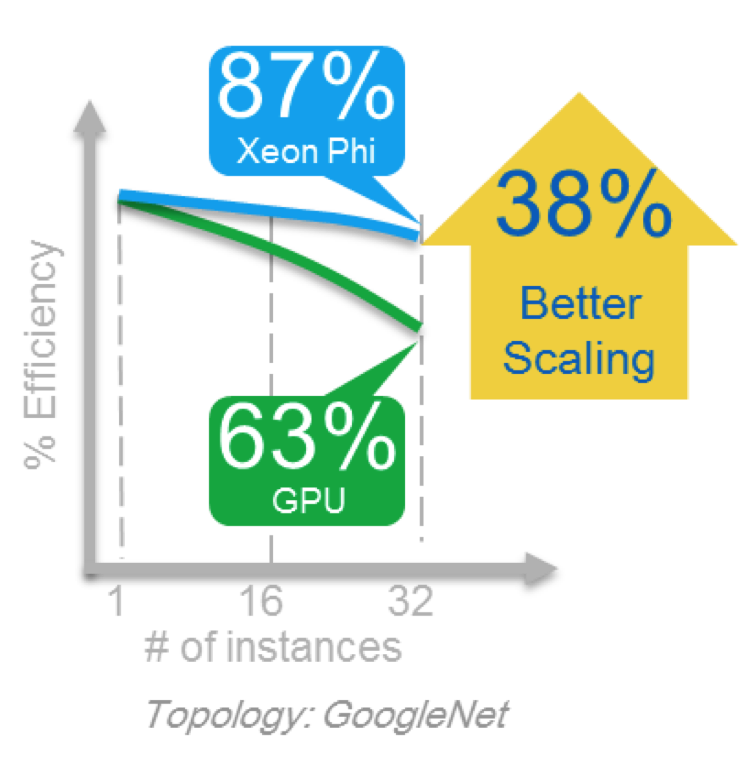

Intel benchmarks show that a combination of Intel SSF technologies provide significantly better scaling during training than GPU-based products.* Apples-to-apples scaling results show that the combined advantages of Intel Xeon Phi processor floating-point capability plus the high-bandwidth, low latency advantages of Intel OPA fabric provide an up to 38% scaling advantage on the GoogLeNet topology.

As shown in the figure below, the efficiency of the Intel blue line remains high (87%) while the efficiency of the GPU line (shown in green) drops fairly quickly (to 63%) when evaluated from 1 to 32 nodes. The values shown reflect efficiency compared to a single node of the same type, meaning the efficiency at 32 nodes for the GPU cluster is calculated based on the single node GPU performance and similarly the 32 node Intel Xeon Phi processor efficiency is calculated based on the single Intel Xeon Phi performance. Results were determined by how quickly the GoogLeNet benchmark achieved a similar model accuracy (e.g. time-to-model) between the two platforms.

Figure 1: Scaling efficiency for an Intel SSF cluster vs. a GPU cluster *

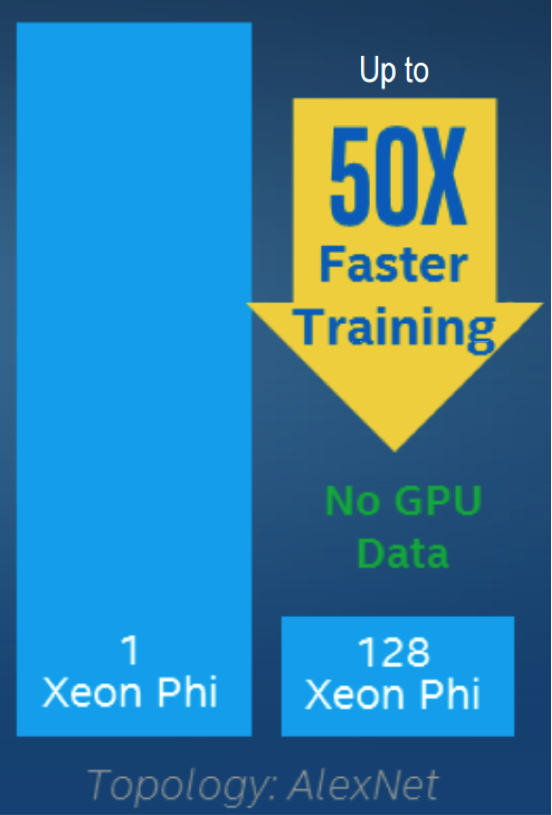

An up to 50x decrease in time-to-model* was achieved when scaling from 1 to 128 Intel Xeon Phi nodes when using AlexNet, another respected machine learning benchmark.

Figure 2: An up to 50x decrease in time-to-model compared to a single Intel Xeon Phi node *

Intel speeds scoring

The Intel announcement of an up to 30x improvement in inference performance using an Intel optimized version of the Caffe framework with MKL, compared to OpenBLAS and the default (unoptimized) Caffe. These results are based on a proof point from a proprietary customer who processes high volumes of images per second.

Figure 3: Inference speedup based on customer proof point

MKL DNN released to the open source community

Recognizing that many open-source projects are highly optimized for GPUs rather that CPUs, Intel will release in 3Q16 to the open source community the MKL DNN source code to help speed the uptake of CPU-optimized machine learning. MKL DNN performs a number of basic and important machine learning operations that are valuable for those building and running machine learning and deep learning applications. MKL DNN has no restrictions and is royalty-free to use.

The DNN performance primitives demonstrate a tenfold performance increase of the Caffe framework on the AlexNet topology [1]. For example, the primitives exploit the Intel® Advanced Vector Extensions 2 (Intel® AVX2) when available on the processor and provides all building blocks essential for implementing AlexNet topology training and classification including:

- Convolution: direct batched convolution

- Pooling: maximum pooling

- Normalization: local response normalization across channels

- Activation: rectified linear neuron activation (ReLU)

- Multi-dimensional transposition (conversion)

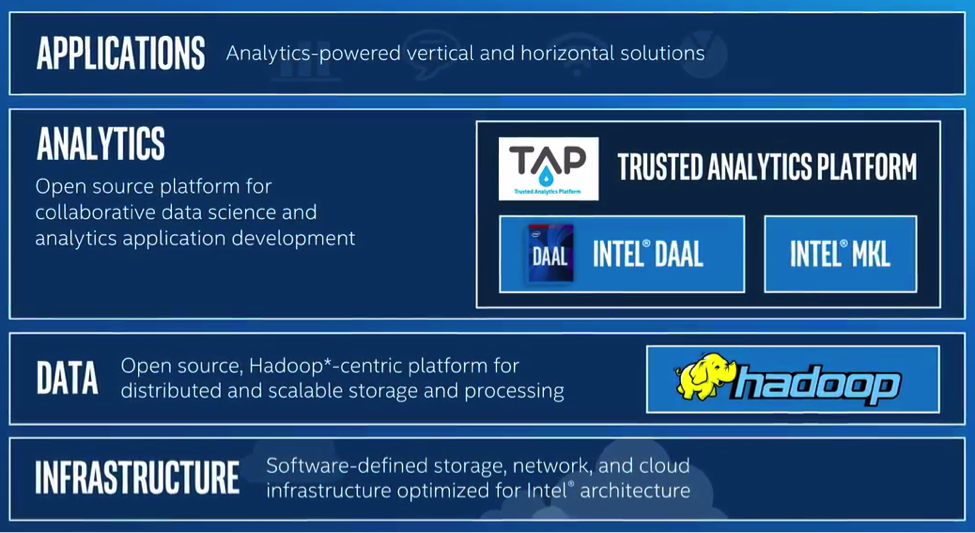

The video, “Faster Machine Learning and Data Analytics Using Intel® Performance Libraries” covers the highly optimized libraries where Intel® Data Analytics Acceleration Library (Intel® DAAL) offers faster ready-to-use higher-level algorithms and Intel MKL provides lower-level primitive functions to speed data analysis and machine learning. These libraries can be called from any big-data framework and use communications schemes like Hadoop and MPI.

Figure 4: An important building block for machine learning and data analytic applications

What is Intel Scalable System Framework

Succinctly, Intel introduced Intel SSF to reduce confusion given the wealth of new technologies now available to HPC customers, and to help them purchase the right mix of validated hardware and software technologies to meet their needs. Additionally at ISC’16, Intel launched another component of Intel SSF: Intel HPC Orchestrator software, a family of modular Intel-licensed and supported premium products based on the publicly available OpenHPC software stack that reduces the burdens of HPC setup and maintenance.

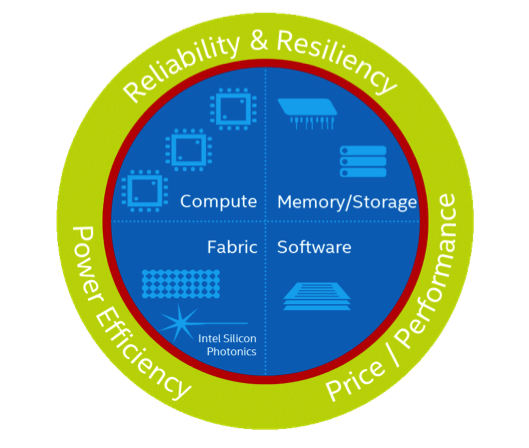

Intel HPC Orchestrator and the Intel Xeon Phi processor product family are but part of Intel SSF that will bring machine-learning and HPC computing into the exascale era. Intel’s vision is to help create systems that converge HPC, Big Data, machine learning, and visualization workloads within a common framework that can run in the data center - from smaller workgroup clusters to the world’s largest supercomputers - or in the cloud. Intel SSF incorporates a host of innovative new technologies including Intel® Omni-Path Architecture (Intel® OPA), Intel® Optane™ SSDs built on 3D XPoint™ technology, and new Intel® Silicon Photonics - plus it incorporates Intel’s existing and upcoming compute and storage products, Intel Xeon Phi processors, and Intel® Enterprise Edition for Lustre* software.

Figure 5: Intel(R) Scalable System Framework

The Intel SSF ecosystem has grown to 31 partners, including 19 system providers and 12 independent software vendors. Ultimately, Intel SFF will lead to a broad application catalog of tested HPC apps that the community can use with the confidence that the software will run well on a system using an Intel SSF configuration. This is one of the benefits of having validated system configurations that software developers can leverage to verify the performance of their applications. More information can be found at intel.com/ssfconfigurations.

Summary

Training a machine learning algorithm to accurately solve complex problems requires large amounts of data that greatly increases the computational, memory, and network requirements. Both TF/s Intel Xeon Phi processors and scalable distributed parallel computing using a high-performance communications fabric are essential technologies that makes the training of deep learning on large complex datasets tractable in both the data center and within the cloud. With the right technology mix, machine and deep learning neural networks can be trained to solve complex pattern recognition tasks - sometimes even better than humans.

Rob Farber is a global technology consultant and author with an extensive background in HPC and in developing machine learning technology that he applies at national labs and commercial organizations. He can be reached at info@techenablement.com.

_______________________________________________________________________________________________________________

Product and Performance Information can be found at http://www.intel.com/content/www/us/en/benchmarks/intel-data-center-performance.html.