Nov. 7, 2017

By: Andrew Jones, VP of Strategic HPC Consulting and Services, NAG

Measuring high performance computing can be very powerful for the businesses that rely on it and the end users that directly employ it. Based on NAG’s experience helping organizations with HPC measurement, we have put together this overview of the subject for TOP500 News.

Measuring usage and costs of your current HPC capability can inform service delivery and policies to extract the optimum science or business impact from your existing set-up. Collecting data on the usage patterns, costs, and value delivered by current HPC capability can also help ensure future decisions are optimal. This enables that subsequent investments can be made with confidence, at the right scale, type and timing for maximum value, and with well understood risks. However, measuring the right things, and making sure they drive the best business or science impact is a surprisingly complex undertaking.

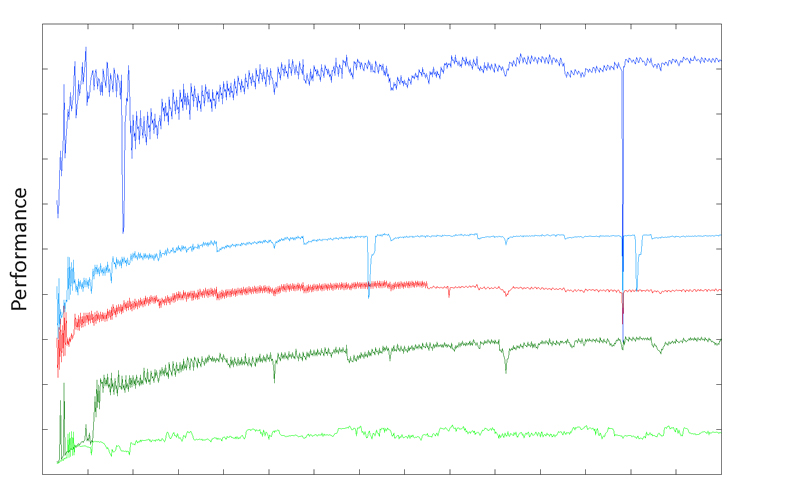

Measuring technical performance seeks answers to basic questions such as: How fast is this supercomputer? How fast is my code? How does my code scale? Which system or processor is faster? The answers are delivered through benchmarking. The process involves running well-understood test cases under specified conditions or rules and recording the appropriate performance data and associated metadata (for example, time to completion, system configuration, code build details, library versions used, system core count, node population, and network topology, to name just a few).

Benchmarks are only specific scenarios, but they are usually analyzed to extrapolate or infer more general behavior. This might include predicting the performance of a potential hardware upgrade or a new algorithm, or, alternatively, identifying a performance bottleneck. It might also be appropriate to measure the effort needed to achieve the desired performance, such as code porting and tuning.

Non-technical performance measurement seeks answers to questions such as: How well is the HPC facility/service run? How well is it used? How fair is it? This includes usage metrics, of which the most common is utilization. However, utilization on its own is a very poor headline metric for two reasons. First, it only reflects usage of the processors, not of the other important and costly components such as memory, the interconnect, resiliency infrastructure, and so on. Second, it embodies a fundamentally flawed assumption that “busier” is the same as “delivering more value!”

Measuring utilization of various system aspects does have a useful role to play in understanding system behavior. Although they are different metrics, energy and power often discussed as if they were the same thing. As a first approximation, power (peak demand) drives the cost of the infrastructure, whereas energy consumption drives the cost of ongoing operation. Each of these can be optimized, noting that optimized might not always mean reduced. For example, there may be quantifiable benefits to expending more energy to get a result faster. Optimization can be explored at hardware or software levels, or a combination of both.

Fairness and funding is about how utilization of a facility is shared among multiple users, through things like scheduling or queue setups, priority handling, and access review or allocations. It will also include a discussion of how the facility is funded, which can be subtly different to how the facility is charged. These aspects will have effects on usage patterns and thus hinder or help drive maximizing the business impact of the HPC capability.

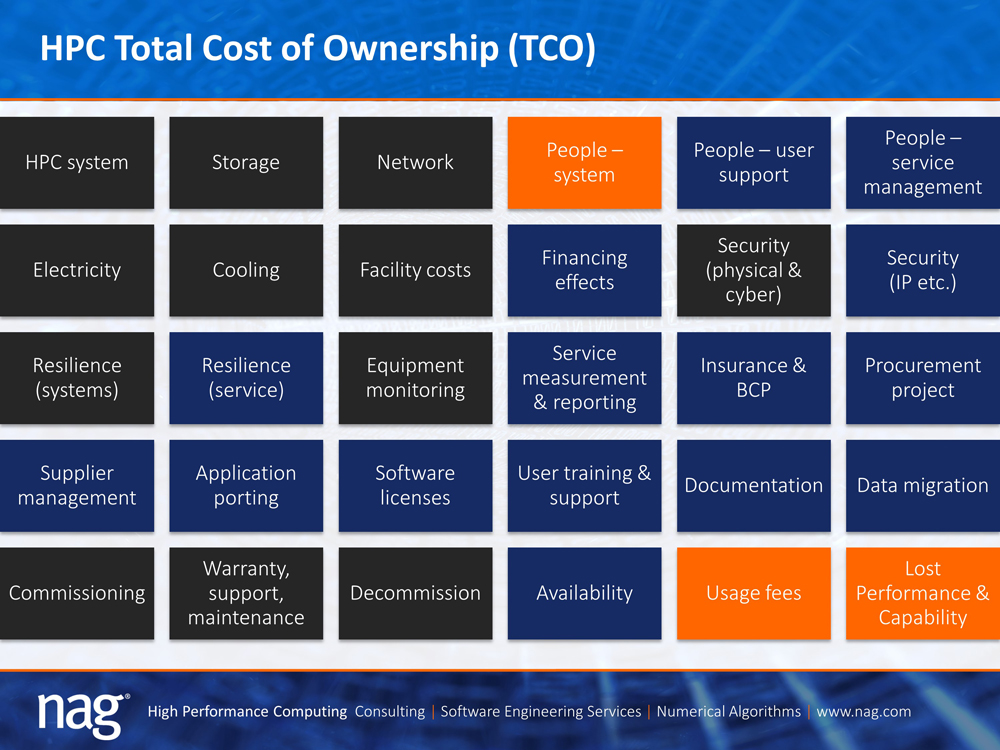

Cost measurement is an essential precursor to ensuring the costs are appropriate to the value generated, or to secure investment to unlock further business or science value. The most visible cost of HPC is the hardware purchase or, in the case of a cloud computing contract or a leased system, the monthly supplier invoice. However, the Total Cost of Ownership, (TCO) has dozens of components even with a simplified treatment. This might include hardware, storage, maintenance, staff, electricity, facility costs, security, resilience, financing effects, application porting, software licenses, user training, and more. Consideration must be given to identifying which costs can be controlled, and by whom, and which costs are borne by which budgets, and how this might change over time.

Perhaps the most important thing to measure for HPC is the value generated. This can be essential in securing future investments, or for driving behavior within an organization. This is particularly relevant when considering expanding HPC use to facilitate new business initiatives or additional research projects.

The two main measures of value used in HPC are return on investment (ROI) and business or science impact. Fairly accounting for the return side of the ROI calculation is often an arduous task and is readily open to debate and challenge. ROI is useful, especially when tensioning against other investment choices. However, ROI alone is insufficient to properly capture the value of HPC. Business or science impact is normally used to address the broader value of HPC. This drives key business decisions or capabilities that rely on HPC, or advances science leadership that is only possible with a certain HPC capability.