Sept. 4, 2016

By: Michael Feldman

Over the years, there has been a torrent of “breakthroughs” in quantum computing research. But for the first time in many years, the technology looks to be on the verge of fulfilling its promises. Thanks to an ambitious effort at Google, quantum computing may become a reality within the next two or three years. A report in New Scientist lays out Google’s plans to commercialize the technology and attain what the company is calling “quantum supremacy.”

In a nutshell, quantum supremacy means building a quantum computer that can perform a task no conventional digital computer can. D-Wave’s quantum annealing machine has demonstrated much faster execution of specific algorithms, but not in a way that establishes a general-purpose quantum computing capability. The idea behind quantum supremacy is that certain problems have a combinatorial landscape so vast that they would be impossible to solve on a conventional machine in any reasonable amount of time, yet are well suited to quantum computation.

Many of these fall into the general category of optimization problems, where a large number of solutions are possible, but the optimal ones (the only solutions of real value) are very difficult to find. In particular, a sufficiently large quantum computer would be able to solve problems in physics, chemistry, and cryptography that are intractable on even the largest supercomputers today. And for Google, there are numerous potential applications in artificial intelligence (AI) that could make the machine learning work being done today look primitive by comparison. The research team at Google believes a quantum computer with as few as 50 qubits can demonstrate such a capability.

Ironically, some of the problems in constructing such a computer could be solved by having one in the first place. For example, to simulate the behavior of quantum circuits, Google borrowed some cycles on Edison, a 2.5-petaflop Cray supercomputer at NERSC, the National Energy Research Scientific Computing Center in Berkeley, California. Modeling a grid of 42 qubits (6 by 7) required 70 TB of Edison’s 356 TB of memory. But because the memory needed grows exponentially with the number of qubits, bumping that grid to just 48 qubits (6 by 8) would have required 2,252 TB, about twice as much as the largest supercomputers have today. A decent-sized quantum computer would be able to simulate its own circuitry much more efficiently.
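The exponential growth behind those memory figures is easy to check, assuming a full state-vector simulation in which n qubits require storing 2^n complex amplitudes, so each added qubit doubles the memory. The article does not say which numeric precision was used, so this minimal Python sketch computes totals for both double precision (16 bytes per amplitude) and single precision (8 bytes), in decimal terabytes. The function and constant names are illustrative only.

```python
def state_vector_bytes(num_qubits: int, bytes_per_amplitude: int) -> int:
    """Memory for a full state vector: 2**n complex amplitudes (assumed model)."""
    return (2 ** num_qubits) * bytes_per_amplitude


def to_tb(num_bytes: int) -> float:
    """Convert bytes to decimal terabytes (1 TB = 10**12 bytes)."""
    return num_bytes / 1e12


DOUBLE = 16  # complex128: two 8-byte floats per amplitude
SINGLE = 8   # complex64: two 4-byte floats per amplitude

for n in (42, 48):
    double_tb = to_tb(state_vector_bytes(n, DOUBLE))
    single_tb = to_tb(state_vector_bytes(n, SINGLE))
    print(f"{n} qubits: {double_tb:,.0f} TB (double), {single_tb:,.0f} TB (single)")
```

Under these assumptions, 42 qubits at double precision comes to about 70 TB and 48 qubits at single precision to about 2,252 TB, matching the two figures quoted. At a fixed precision, the six extra qubits multiply the memory by 2^6 = 64.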

So far Google has been pretty low-key about its quantum computing achievements, but since the company has been collaborating with the University of California, Santa Barbara (UCSB) and the University of the Basque Country in Spain, a lot of the work has found its way into the public domain. They recently published a paper on a nine-qubit machine that uses superconducting quantum circuits and employs the qubits themselves to supply the system with error correction. Error correction is the key piece of technology that will enable the design to scale to a greater number of qubits, since it has been a major stumbling block in building robust quantum computers of any size.
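Why error correction makes scaling possible can be illustrated, by analogy only, with the simplest classical scheme: a repetition code, which stores each bit in n copies and decodes by majority vote. This is an assumption made for illustration, not Google’s method; real quantum codes cannot simply copy qubit states and instead detect errors by measuring parities with extra qubits. The sketch assumes each copy flips independently with probability p, and the function name is hypothetical.

```python
from math import comb


def logical_error_rate(p: float, n: int) -> float:
    """Probability that a majority vote over n copies (n odd) decodes wrongly,
    assuming each copy flips independently with probability p."""
    k_min = n // 2 + 1  # a majority of copies must flip to corrupt the result
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(k_min, n + 1))


for n in (1, 3, 5):
    print(f"{n} copies: logical error rate {logical_error_rate(0.01, n):.2e}")
```

With p = 0.01, three copies cut the error rate to about 0.0003 and five copies to roughly 1e-5. For this classical code, redundancy helps whenever p is below 0.5; quantum codes only pay off below a much stricter error threshold.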

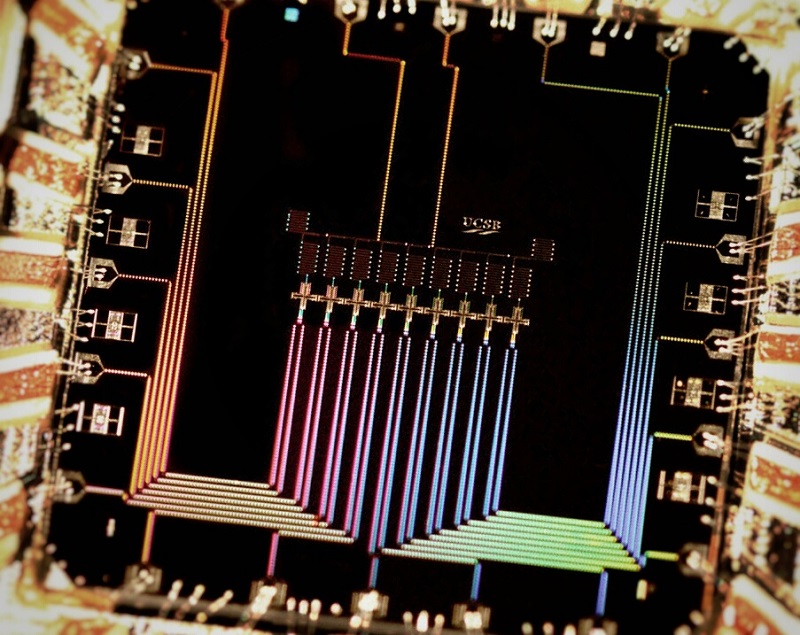

Nine-qubit processor with built-in error detection and correction. Photo credit: Julian Kelly.

Google has not been making a big deal about such accomplishments, but those in the field familiar with the company’s efforts have been more vocal about the significance of the work. The money quote from the New Scientist article: “They are definitely the world leaders now, there is no doubt about it,” says Simon Devitt at the RIKEN Center for Emergent Matter Science in Japan. “It’s Google’s to lose. If Google’s not the group that does it, then something has gone wrong.”

The company’s first serious foray into quantum computing came in 2013, when it purchased a quantum computer from D-Wave, a startup that pioneered the field using a quantum annealing approach. Although that system had only limited success in proving its worth, it piqued Google’s interest enough for the company to consider developing a much more powerful and usable machine of its own design. In 2014, Google hired physics professor John Martinis from UCSB to head its quantum computing project. He advanced error correction technology that preserves the fragile quantum states of the qubits, and did so in a way that makes it practical to scale to larger processors.

The hardware demonstrated in the nine-qubit prototype mentioned above drew encouraging reviews from quantum computing experts, including Scott Aaronson, a notable researcher in the field. According to him, the qubits Martinis demonstrated were of “higher quality” than those of the D-Wave machine and represented a viable path to a scalable system. Matthias Troyer, a computational physicist at the Swiss Federal Institute of Technology in Zurich, thinks the Google effort has accelerated the timeline for practical quantum computing, noting that the company has demonstrated how it can achieve that goal. Although a 50-qubit system could be possible as early as the end of 2017, the general consensus is that the technology will need to cook for another two years or so.

Even so, a working quantum computer could appear before an exascale supercomputer runs its first matrix multiplication, which is scheduled to happen around the turn of the decade. Although HPC systems today are deterministic, many of the applications run on these machines are more probabilistic in nature; that is, at heart they are optimization problems well suited to quantum computing. Certainly, such fundamental problems as molecular modeling lend themselves to quantum computation. But even more traditional HPC applications, like weather forecasting, financial risk analysis, and drug discovery, can be recast as optimization problems. The prospect of quantum computing arriving just as the exascale era approaches could split high performance computing into two different paths.

All of this is yet to be written, of course. But Google’s entry into the quantum computing fray is significant. It’s one thing for a small Canadian startup like D-Wave to be driving the commercialization of the technology; it’s quite another for one of the most successful tech companies of the 21st century to be doing it. Google is not alone in this regard. Intel, Microsoft, and IBM all have research projects underway to advance the technology. And you can be sure they and their stockholders are not in it to win Nobel prizes. The days of commercial quantum computing as an oxymoron may be coming to an end.