July 6, 2017

By: Michael Feldman

Chinese web giant Baidu announced it will use some of the latest NVIDIA hardware and software to augment a number of its AI-based services.

At the company’s AI developer conference, Baidu president and COO Qi Lu said the company will deploy the new Volta-generation V100 GPUs in its public cloud, offering customers NVIDIA’s latest technology for performing deep learning. The upgraded infrastructure will be aimed at researchers and businesses that are developing applications for “real-time understanding of images, speech, text and video.”

Thanks to the purpose-built Tensor Cores NVIDIA has incorporated into the V100, the new processor can deliver up to 120 deep learning teraflops per chip. That level of performance approaches that of Google’s second-generation Tensor Processing Unit (TPU), a 180-teraflop device specifically engineered for deep learning work.

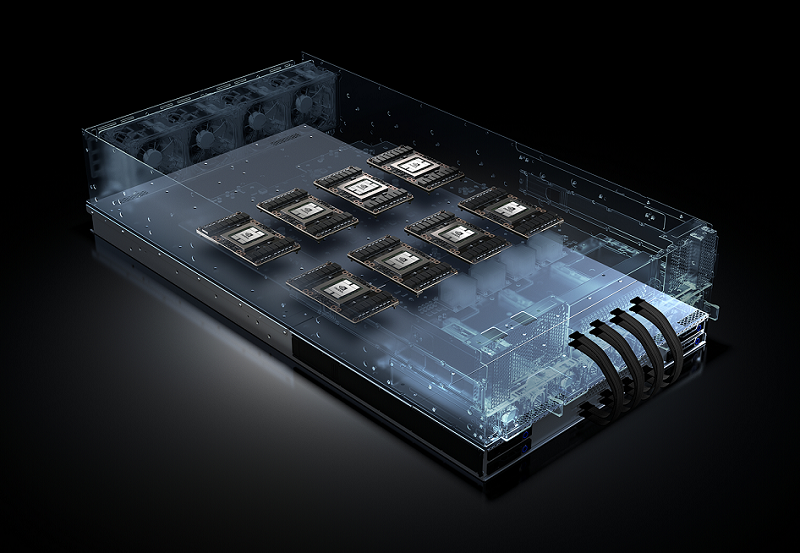

Baidu intends to deploy the V100s in servers based on NVIDIA’s HGX reference architecture. The HGX specification puts eight Tesla-class GPUs in a single box, hooked together in an NVLink-based hybrid cube-mesh to maximize GPU-to-GPU communications. An eight-GPU configuration using the V100 accelerators means it’s now possible to put nearly a petaflop – 960 teraflops, to be exact – into a single chassis.
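
As a rough check on the chassis figure, the sketch below multiplies the per-GPU peak by the number of GPUs in an HGX box. It assumes the 120-teraflop Tensor Core peak quoted for the V100 and simple linear aggregation. Sustained throughput on real workloads would be lower. The constant and function names are hypothetical, chosen only for illustration.

```python
# Peak figures quoted in the article, in teraflops (theoretical, not sustained).
V100_PEAK_TFLOPS = 120    # per V100 GPU, Tensor Core deep learning peak
GPUS_PER_HGX_BOX = 8      # HGX reference design: eight GPUs per chassis
PETAFLOP_IN_TFLOPS = 1000


def chassis_peak_tflops(per_gpu_tflops: float, gpu_count: int) -> float:
    """Aggregate theoretical peak: per-GPU peak times GPU count.

    Assumes perfect linear scaling across GPUs, which real workloads
    will not achieve.
    """
    return per_gpu_tflops * gpu_count


total = chassis_peak_tflops(V100_PEAK_TFLOPS, GPUS_PER_HGX_BOX)
print(f"{total:.0f} teraflops = {total / PETAFLOP_IN_TFLOPS:.2f} petaflops")
```

Running it prints `960 teraflops = 0.96 petaflops`, which is where the “nearly a petaflop” description comes from.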

It was not immediately revealed who would be supplying the HGX boxes for Baidu, but since NVIDIA recently teamed up with Foxconn, Inventec, Quanta and Wistron to build this gear for hyperscale customers, Baidu has a number of options open to it. Microsoft, with its Project Olympus HGX-1 server, and Facebook, with its “Big Basin” system, are two early adopters of the HGX reference standard for their respective clouds.

Baidu also intends to deploy NVIDIA P4 accelerators in its cloud. The P4 is a Pascal-generation GPU optimized for the inferencing side of deep learning (NVIDIA maintains the V100 is adept at both training and inferencing).

The partnership between Baidu and NVIDIA is about more than just supplying hardware, though. The companies will also collaborate to optimize Baidu’s PaddlePaddle deep learning framework for the Volta GPU architecture. PaddlePaddle is to Baidu what TensorFlow is to Google: an in-house developed (and now open source) framework that underlies most of the AI codes running in their respective clouds, including such key applications as search, image classification, speech recognition, and language translation.

Besides the AI cloud upgrade, Baidu will also adopt NVIDIA’s DRIVE PX 2 for Apollo, the company’s self-driving car platform. In addition, Baidu will add its DuerOS AI-based voice-command system to NVIDIA’s SHIELD TV.