June 22, 2017

By: Michael Feldman

For the first time in several years, AMD has brought a server chip to market that provides some real competition to Intel and its near-total domination of the datacenter market. The new AMD silicon, the EPYC 7000 series processors, comes with up to 32 cores, along with a number of features that offer some useful differentiation from the Xeon competition.

The new AMD processors are broadly aimed at the cloud and datacenter markets, including the high performance computing space. With regard to the latter, EPYC is going to have some challenges in HPC environments, but AMD definitely has a case to make for its use there. Before we dive into that subject, let’s look at the feature set of the new products.

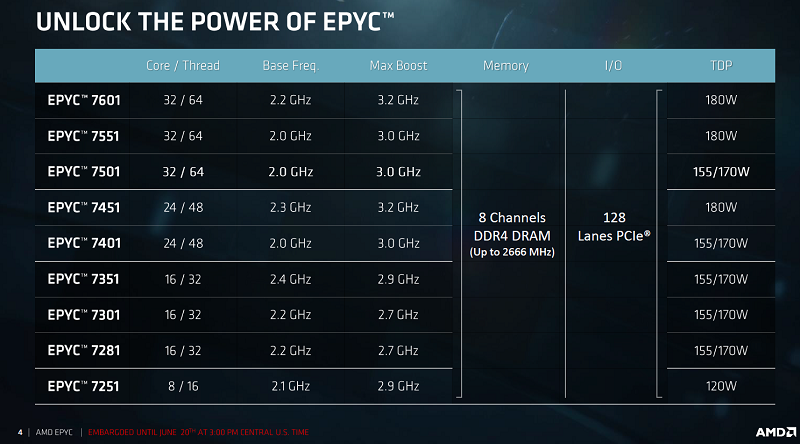

The EPYC processors launched this week come with 8 to 32 cores, and like their Xeon rivals, can execute two threads per core. AMD has decided to offer only single-socket and dual-socket versions, leaving the much smaller quad-socket-and-above market to Intel.

Clock frequencies don’t vary all that much across the range of EPYC SKUs; they start at 2.0 GHz and top out at 2.4 GHz. As you’ll note from the tables below, the frequencies aren’t necessarily higher at the lower core counts, as one might expect. The same holds true for the “max boost” clock frequencies.

EPYC also features a new interconnect known as the Infinity Fabric, which takes the place of the HyperTransport bus on AMD’s older Opteron processors. In this case, though, the fabric connects not only the internals of the EPYC multi-chip module (MCM) – the individual dies that make up the chip – but also the memory and, in a dual-socket setup, the two processors themselves. Socket-to-socket bandwidth is up to 152 GB/sec, while memory bandwidth tops out at 171 GB/sec per socket.

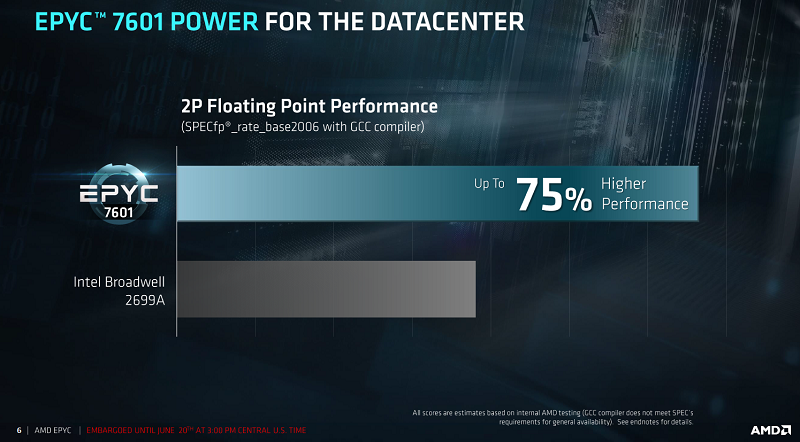

Across the EPYC product set, AMD is claiming significantly higher integer performance – 21 to 70 percent higher – compared to comparably priced Xeon “Broadwell” processors, based on SPECint_rate_base2006. And for the top-end 32-core EPYC 7601 chip, AMD says its floating point performance is 75 percent higher than that of Intel’s Broadwell E5-2699A v4 processor, based on SPECfp_rate_base2006.

No doubt, some of the performance advantage is due to the generally higher core counts of the EPYC parts compared with comparably priced Xeon Broadwell SKUs. But that’s sort of beside the point. The real issue is that, for the most part, EPYC processors will not be competing against Broadwell, but against Intel’s new “Skylake” Xeon processors, which are expected to launch in July.

The Skylake design should offer better overall performance than Broadwell. More importantly, Skylake will support the AVX-512 instruction set, which will boost vector math performance (both integer and floating point) significantly compared to its predecessor. So AMD’s performance-per-dollar comparisons will have to be revisited once Skylake launches, but it’s reasonable to assume that Intel’s top-end chips will outrun the EPYC 7601 in floating point performance, even if AMD manages to offer more “value.”

AMD does appear to have a clear advantage in memory support. Each EPYC processor is equipped with eight memory channels, which support up to 16 DIMMs of DDR4 DRAM at speeds up to 2,666 MT/s, for up to 2 TB per socket. On a dual-socket system, that doubles to 4 TB. Two EPYC 7601 processors in a server deliver 146 percent more bandwidth on the STREAM benchmark than a comparable “Broadwell” Xeon box. And even though Skylake Xeons will supposedly support six memory channels to Broadwell’s four, it looks like EPYC’s memory advantage will prevail for the time being.
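The capacity and bandwidth figures above hang together arithmetically, and a few lines of back-of-the-envelope code make that visible. This is a minimal sketch, assuming a standard 64-bit (8-byte) DDR4 channel, a detail the article does not state; the channel count, transfer rate, DIMM count and per-socket capacity come from the text. It reproduces the roughly 171 GB/sec per-socket peak quoted earlier and shows the DIMM size implied by 2 TB across 16 slots.

```python
# Back-of-the-envelope EPYC memory figures, using numbers quoted in the article.
# Assumption (not stated in the article): each DDR4 channel is 64 bits (8 bytes) wide.

CHANNELS_PER_SOCKET = 8
DIMMS_PER_SOCKET = 16
TRANSFER_RATE_MT_S = 2666      # DDR4-2666: millions of transfers per second
BYTES_PER_TRANSFER = 8         # 64-bit channel (assumption)
CAPACITY_PER_SOCKET_TB = 2


def peak_bandwidth_gb_s(channels: int, mt_s: int, bytes_per_transfer: int) -> float:
    """Theoretical peak bandwidth in decimal GB/s: channels x transfers/s x bytes/transfer."""
    return channels * mt_s * 1e6 * bytes_per_transfer / 1e9


def dimm_size_gb(capacity_tb: float, dimms: int) -> float:
    """Per-DIMM size needed to reach the quoted capacity (1 TB = 1024 GB)."""
    return capacity_tb * 1024 / dimms


per_socket = peak_bandwidth_gb_s(CHANNELS_PER_SOCKET, TRANSFER_RATE_MT_S, BYTES_PER_TRANSFER)
print(f"Peak bandwidth per socket: {per_socket:.1f} GB/s")   # 170.6
print(f"Dual-socket peak: {2 * per_socket:.1f} GB/s")        # 341.2
print(f"DIMM size for {CAPACITY_PER_SOCKET_TB} TB/socket: "
      f"{dimm_size_gb(CAPACITY_PER_SOCKET_TB, DIMMS_PER_SOCKET):.0f} GB")  # 128
```

These are theoretical peaks; measured results such as STREAM always land somewhat below them.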

EPYC’s larger memory capacity and higher bandwidth are designed to deliver more performance for data-intensive applications, which are particularly sensitive to the worsening bytes/flops (or bytes/ops) ratio of modern processors. AMD’s calculation here is that for most datacenter applications these days, memory access, rather than compute, is the limiting factor. The bigger memory capacity also makes the single-socket EPYC solution more attractive, since many customers populate the second socket solely to get more memory.
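The bytes/flops argument can be made concrete with a simple roofline-style estimate. In the sketch below, the 32-core count and the 171 GB/sec bandwidth come from the article; the 2.0 GHz clock and 8 double-precision flops per cycle per core are hypothetical placeholders, not published EPYC specifications. The example kernel is the STREAM triad (`a[i] = b[i] + q * c[i]`), which moves 24 bytes per two flops in double precision, i.e. it needs 12 bytes of memory traffic per flop.

```python
# Roofline-style estimate of whether a kernel is memory-bound or compute-bound.
# From the article: 32 cores, ~171 GB/s per socket.
# Hypothetical placeholders: 2.0 GHz clock, 8 DP flops per cycle per core.


def peak_gflops(cores: int, clock_ghz: float, flops_per_cycle_per_core: int) -> float:
    """Theoretical compute peak in GFLOPS."""
    return cores * clock_ghz * flops_per_cycle_per_core


def machine_bytes_per_flop(bandwidth_gb_s: float, peak: float) -> float:
    """Machine balance: bytes of memory traffic the chip can supply per flop."""
    return bandwidth_gb_s / peak


def attainable_gflops(bandwidth_gb_s: float, peak: float, kernel_bytes_per_flop: float) -> float:
    """A kernel runs at the lower of the compute peak and bandwidth / (bytes it needs per flop)."""
    return min(peak, bandwidth_gb_s / kernel_bytes_per_flop)


peak = peak_gflops(cores=32, clock_ghz=2.0, flops_per_cycle_per_core=8)   # hypothetical inputs
balance = machine_bytes_per_flop(171.0, peak)
triad = attainable_gflops(171.0, peak, kernel_bytes_per_flop=24 / 2)     # STREAM triad: 24 B per 2 flops

print(f"Peak: {peak:.0f} GFLOPS, balance: {balance:.3f} bytes/flop")
print(f"Triad attainable: {triad:.2f} GFLOPS ({100 * triad / peak:.1f}% of peak)")
```

Under these assumptions the triad kernel can use only a few percent of the compute peak, so more bandwidth, rather than more flops, is what would speed it up. That is the reasoning behind AMD’s emphasis on memory.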

The EPYC processor also offers an ungodly amount of PCIe connectivity – 128 lanes per socket, compared with the expected 48 lanes for the Skylake Xeon processor. 128 lanes is enough to attach four to six GPUs or up to 24 NVMe drives. This also buttresses the case for single-socket servers, since, once again, you can avoid using the second socket just to get access to additional devices. In fact, a dual-socket configuration offers the same 128 PCIe lanes, since each processor gives up 64 of its lanes to the Infinity Fabric link with the other processor.
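The lane accounting described above, including why two sockets yield no more usable lanes than one, can be sketched as a small budget calculation. The 128 lanes per socket and the 64 lanes per socket given to the inter-socket link come from the article. The device widths (x16 per GPU, x4 per NVMe drive) are typical values assumed for illustration, and `usable_lanes` and `lanes_left` are hypothetical helper names.

```python
# PCIe lane budget for EPYC, using the article's figures:
# 128 lanes per socket; in a dual-socket system each socket gives 64 lanes
# to the Infinity Fabric link between the processors.
# Assumed (typical) device widths: x16 per GPU, x4 per NVMe drive.

LANES_PER_SOCKET = 128
INTER_SOCKET_LANES_PER_SOCKET = 64
GPU_LANES = 16
NVME_LANES = 4


def usable_lanes(sockets: int) -> int:
    """Lanes left for devices after the inter-socket link is carved out."""
    if sockets == 1:
        return LANES_PER_SOCKET
    if sockets == 2:
        return 2 * (LANES_PER_SOCKET - INTER_SOCKET_LANES_PER_SOCKET)
    raise ValueError("EPYC 7000 series ships only in 1- and 2-socket versions")


def lanes_left(total: int, gpus: int = 0, nvme: int = 0) -> int:
    """Remaining lanes after attaching the given devices; raises if over budget."""
    used = gpus * GPU_LANES + nvme * NVME_LANES
    if used > total:
        raise ValueError(f"needs {used} lanes, only {total} available")
    return total - used


print(usable_lanes(1), usable_lanes(2))        # 128 128
print(lanes_left(usable_lanes(1), gpus=6))     # 32
print(lanes_left(usable_lanes(1), nvme=24))    # 32
```

At x16 per GPU, 128 lanes would in principle take eight GPUs. A four-to-six figure leaves lanes free for networking and other devices.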

In summary, while even the fastest EPYC processors are unlikely to outperform the top Skylake parts in pure computational horsepower, they may be extremely competitive on performance per dollar or performance per watt per dollar. And for memory capacity and performance, as well as PCIe connectivity, they will outshine their Intel counterparts. Apparently, that was enough to attract Baidu and Microsoft, who are early customers of record.

For HPC use, EPYC may appear to be something of a tradeoff. It’s worth considering, though, that in 2017, the cheapest and most efficient flops are found on GPUs or other manycore processors, not on multicore CPUs (with the caveat that not all flops are equally accessible to every application across these platforms). In addition, for many HPC applications, memory access is the most critical bottleneck.

With that in mind, AMD does have a high performance story to tell. It’s regrettable that the company did not use the recent ISC conference to tell it. Instead, the EPYC launch was announced in Austin, Texas, during the company’s Financial Analyst Day on June 20, and no one from the server side was dispatched to Frankfurt, Germany this year. (AMD did talk about their new Radeon Instinct GPUs for deep learning work at ISC, and we’ll be reviewing those in an upcoming article.)

It’s certainly understandable that AMD is focusing on the cloud and hyperscale space for the initial EPYC launch, given that it represents a bigger and faster-growing market than HPC. But as Intel discovered a while ago, being a leader at the high end of the market has downstream benefits as well.

The next time the HPC faithful are gathered in large numbers will be in November at SC17, and by that time the Skylake Xeon processors will be available for head-to-head comparisons on real applications. It would serve AMD well to be ready to talk about its HPC ambitions for EPYC at the Denver event.