Dec. 13, 2016

By: Michael Feldman

AMD has announced Radeon Instinct, a new line of GPUs aimed at accelerating machine learning applications in the datacenter. Designed to go up against the best NVIDIA can offer, the Instinct products deliver lots of performance and a number of high-end features. The products were unveiled at AMD Technology Summit, which took place last week.

Up until now, AMD had ceded the machine learning acceleration market to NVIDIA, which over the last few years has developed a series of GPUs designed with specific capabilities aimed at this application space – most notably, reduced precision arithmetic. NVIDIA’s latest offerings in this area, include the P4 and P40 GPUs, for machine learning inferencing, and the P100 GPU, for machine learning training.

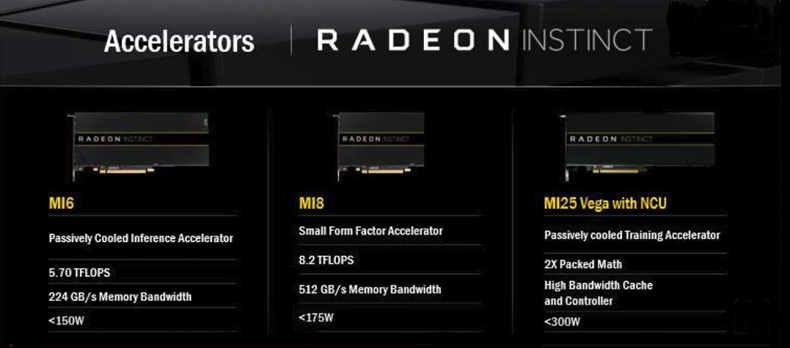

AMD’s initial Radeon Instinct product set consists of the MI6, MI8, and MI25 – the MI, if you were wondering, stands for Machine Intelligence. All are passively cooled cards and come with peer-to-peer support. The latter is accomplished via the BAR (base address register) technology, which allows the GPUs to talk directly to one another on the network without the need for host CPU involvement. In addition, all the Instinct products are equipped with AMD’s multiuser GPU technology, known as MxGPU, which allows the hardware to be virtualized. Typically, GPU virtualization is employed for graphics processing in virtual desktop environments, but AMD believes there’s use for it in machine learning set-ups as well.

The MI6 is the entry-level Radeon Instinct GPU. It’s based on the “Polaris” architecture and is aimed at deep learning inferencing applications. The MI6 tops out at 5.7 teraflops of FP16 (half precision) peak performance and delivers 224 GB/sec of memory bandwidth across its 16 GB of memory. Power draw is under 150 watts, as befits an inferencing chip that for web-sized datacenters.

The MI8 is based on the “Fiji” architecture and is built as a small form factor device. It delivers 8.2 FP16 teraflops. Memory is provided by 4GB of high bandwidth memory (HBM), providing 512 GB/sec of bandwidth. Although this GPU is also aimed at inferencing applications, it can serve HPC workloads as well. That suggests there is plenty of 32-bit and 64-bit capability available in the hardware, although there is no datasheet available to confirm this. This device draws less than 175 watts.

The MI25 represents the high-end Instinct part and is based on the new “Vega” architecture. It is aimed at training the neural networks used in many machine learning applications. Although no flops metric was offered by AMD, based on the naming convention (and the description of early server hardware that incorporates this device), the MI25 will deliver about 25 FP16 teraflops of performance. No memory specs were provided either, but given its high-end nature, it will likely come equipped with high bandwidth memory of some sort, probably 8 GB as a minimum.

Source: AMD

Source: AMD

Not surprisingly, the MI25 draws the most power of the bunch, but still manages to stay under 300 watts. The MI25 will compete head-to-head with the P100 Tesla, NVIDIA’s flagship GPU for training neural networks. It too maxes out at 300 watts. But at 21 FP16 teraflops, the P100 delivers somewhat less peak performance than its new cousin at AMD.

Although the peak flops metrics are relatively close, AMD showed a chart in which both the MI8 and MI25 were beating NVIDIA’s Titan-X GPU on the DeepBench GEMM test, a benchmark used to approximate performance on deep learning types of codes. In fact, the MI25 card outperformed the Titan-X by about 50 percent, despite being only about 20 percent faster in peak performance. The Titan-X uses the same chip as the higher end P100, but lacks the high bandwidth memory of its Tesla counterpart. Regardless if it was the memory, the specific benchmark implementation, or some other factor that contributed to the quicker times on the MI25, performance on real life applications will be the real test for the new GPU.

Some of those application results will probably be on Inventec gear. Inventec is an ODM partner of AMD’s, which is building server hardware with the MI25 card. That includes the K8888 G3, a 100-teraflop 2U server, with dual Xeon CPUs and four MI25 GPUs. A 16-GPU box, under the name of “Falconwitch,” quadruples that to 400 teraflops. A rack of these can be built with up to 120 of the MI25 devices, delivering an aggregate performance of three FP16 petaflops.

And if you want an all-AMD server, a Zen CPU-Radeon Instinct GPU platform will also be available. That one will link a single “Naples” Zen CPU with multiple GPU cards -- as many as 16 of them. No word yet on who would be offering such a server, but Supermicro is one likely possibility.

To support the new hardware, AMD has developed a machine learning software stack optimized for their Instinct GPUs. One important component, known as MIOpen, contains a GPU library of routines to accelerate various machine learning operations like convolutions, pooling, activation functions, and normalization. AMD is offering it as a free, open source package.

AMD has also added Radeon implementations of various deep learning frameworks to their open source software platform ROCm (Radeon Open Compute). Supported frameworks include Caffe, Torch 7, Tensorflow CNTK, Chainer, and Theano. ROCm also comes with domain-specific compilers for linear algebra and tensors, as well as an open compiler and language runtime.

In general, AMD is using the open aspect of its support software to try to lure customers away from NVIDIA, with its more proprietary CUDA stack. Nevertheless, because so much code is already written in CUDA, AMD has also provided a tool, known as Heterogeneous-compute Interface (HIP), to convert CUDA code to common C++, which can then be run through AMD’s HCC compiler to produce executable code for Radeon silicon. The conversion isn’t 100 percent, but it should speed up the retargeting process significantly. For customers with an existing code base of machine learning applications written in CUDA, the HIP tool could prove invaluable to those looking to switch vendors.

AMD indicated it’s giving top priority to the cloud/hyperscale market as it rolls out the Instinct products. That certainly makes sense, given that this is currently the largest customer base for these accelerated machine learing. Companies like Google, Amazon, Baidu and others are rapidly build out this infrastructure as they expand their AI capabilities for cloud customers. Google and Alibaba already use Radeon technology (or will soon do so), and may end up moving over to the Instinct parts in the future. Beyond the hyperscalers, AMD is also looking at some initial engagements in financial services, energy, life science, and automotive.

Radeon Instinct cards are expected to start shipping in the first half of 2017. At that point, volume shipments of the NVIDIA P100 will have already commenced, as will shipments of the P4 and P40 parts. Intel meanwhile will be introducing its own machine learning processors in 2017, namely Knights Mill and Lake Crest. That will give hyperscalers and other customers deploying machine learning gear a lot more choice in the coming year.