June 28, 2017

By: Michael Feldman

AMD is looking to penetrate the deep learning market with a new line of Radeon GPU cards optimized for processing neural networks, along with a suite of open source software meant to offer an alternative to NVIDIA’s more proprietary CUDA ecosystem.

The company used the opportunity of the ISC17 conference to lay out its deep learning strategy and fill in a few more details on both the hardware and software side. In a presentation titled Deep Learning: The “Killer” App for GPUs, AMD’s Mayank Daga admitted that the company has fallen behind in this area, but claimed that its new Radeon Instinct line, which it will roll out later this year, is on par with the best the competition has to offer.

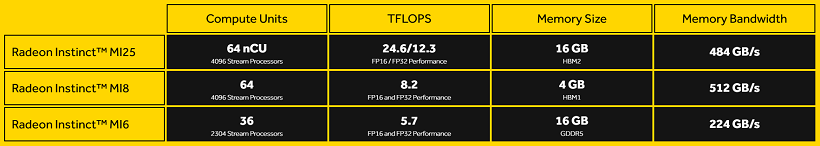

The initial Radeon Instinct GPUs (the MI25, MI8, and MI6) were first announced back in December 2016 and reviewed here by TOP500 News. All of these accelerators provide high levels of 16-bit and 32-bit performance, the most common data types for deep learning codes. Apparently, there is some 64-bit capability buried in them as well, but not enough to be useful for more traditional HPC applications. The MI25 and MI8 packages include integrated high bandwidth memory: HBM2 on the MI25 and first-generation HBM on the MI8. The three GPUs spec out as follows:

While all of these GPUs are focused on the same application set, they cut across multiple architectures. The MI25 is built on the new “Vega” architecture, while the MI8 and MI6 are based on the older “Fiji” and “Polaris” platforms, respectively.

The top-of-the-line MI25 is built for large-scale training and inferencing applications, while the MI8 and MI6 devices are geared mostly for inferencing. AMD says they are also suitable for HPC workloads, but their limited 64-bit precision restricts the application set principally to some seismic and genomics codes. According to an unnamed source manning the AMD booth at ISC, the company plans to deliver 64-bit-capable Radeon GPUs in the next go-around, presumably to serve a broader array of HPC applications.
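To make the precision point concrete, here is a toy Python sketch. It is an illustration under stated assumptions, not a model of how the MI25 or any other GPU actually computes. It adds 0.0001 to a running total 10,000 times and rounds the total after every addition to FP16, FP32, or FP64, using the standard `struct` module's half-, single-, and double-precision formats. The helper names `round_to` and `accumulate` are hypothetical. In FP16 the total stalls well short of the exact answer of 1.0 once the gap between neighboring representable values grows past twice the step size. Long-running scientific simulations cannot tolerate that kind of error.

```python
import struct

# Round a Python float (an IEEE 754 double) to a narrower format and back.
# struct's "e" = binary16 (FP16), "f" = binary32 (FP32), "d" = binary64 (FP64).
def round_to(fmt: str, x: float) -> float:
    return struct.unpack(fmt, struct.pack(fmt, x))[0]

def accumulate(fmt: str, step: float = 1e-4, n: int = 10_000) -> float:
    """Add `step` n times, rounding the running total to `fmt` after each add.

    Hypothetical helper: it mimics an accumulator that stores its result
    in the given precision; it is not how any particular GPU works.
    """
    total = 0.0
    for _ in range(n):
        total = round_to(fmt, total + step)
    return total

if __name__ == "__main__":
    for name, fmt in (("FP16", "<e"), ("FP32", "<f"), ("FP64", "<d")):
        # The exact answer is 10_000 * 1e-4 = 1.0
        print(f"{name}: {accumulate(fmt):.6f}")
```

Deep learning training copes with this better, partly because mixed-precision schemes keep accumulations in FP32. Many traditional HPC codes, by contrast, expect FP64 throughout.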

For comparison’s sake, NVIDIA’s P100 delivers a peak of 21.2 teraflops of FP16 and 10.6 teraflops of FP32. So from a raw flops perspective, the new MI25 compares rather favorably. However, once NVIDIA starts shipping the Volta-class V100 GPU later this year, the 120 teraflops of mixed-precision performance delivered by its new Tensor Cores will blow that comparison out of the water.
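The comparison above is simple ratio arithmetic on peak figures. Here is a minimal Python sketch using only the numbers quoted in this article. The constant and function names are illustrative. Keep in mind that these are peak rates. The V100's Tensor Core figure also counts mixed-precision matrix-multiply operations, so it is not directly comparable to general-purpose FP16 throughput.

```python
# Peak throughput figures quoted in the article, in teraflops.
PEAK_TFLOPS = {
    "P100 FP16": 21.2,
    "P100 FP32": 10.6,
    "V100 Tensor Core": 120.0,
}

def ratio(a: str, b: str) -> float:
    """How many times larger peak `a` is than peak `b`."""
    return PEAK_TFLOPS[a] / PEAK_TFLOPS[b]

if __name__ == "__main__":
    # P100: FP16 runs at twice the FP32 rate.
    print(f"P100 FP16 vs FP32: {ratio('P100 FP16', 'P100 FP32'):.1f}x")
    # V100 Tensor Cores vs. P100 FP16: roughly 5.7x on paper.
    print(f"V100 Tensor Core vs P100 FP16: {ratio('V100 Tensor Core', 'P100 FP16'):.2f}x")
```

Adding the MI25's own FP16 and FP32 peaks from its spec sheet to `PEAK_TFLOPS` would extend the same comparison to AMD's card.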

A major difference is that AMD is apparently building specialized accelerators for deep learning inference and training, as well as HPC applications, while NVIDIA has abandoned this approach with the Volta generation. The V100 is an all-in-one device that can be used across these three application buckets. It remains to be seen which approach will be preferred by users.

The bigger difference is on the software side for GPU computing. AMD says it plans to keep everything in its deep learning/HPC stack open source. That starts with the Radeon Open Compute platform, aka ROCm, which includes GPU drivers, C/C++ compilers for heterogeneous computing, and the HIP tools for converting CUDA code. OpenCL and Python are also supported.
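Part of what HIP's conversion tooling (AMD's hipify tools) does is rename CUDA runtime calls to their HIP counterparts, for example `cudaMalloc` to `hipMalloc`. The Python sketch below illustrates only that renaming step. It assumes a small, hand-picked mapping table, and the function name `toy_hipify` is hypothetical. The real tools cover far more of the CUDA API and handle many constructs that plain text substitution cannot.

```python
import re

# Hand-picked subset of CUDA runtime names and their HIP equivalents.
# Illustrative only: the real hipify tools use far larger tables.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaMemcpyDeviceToHost": "hipMemcpyDeviceToHost",
    "cudaFree": "hipFree",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
}

# Longest names first so "cudaMemcpyHostToDevice" wins over "cudaMemcpy";
# \b keeps the toy from rewriting fragments of longer identifiers.
_PATTERN = re.compile(
    r"\b(" + "|".join(map(re.escape, sorted(CUDA_TO_HIP, key=len, reverse=True))) + r")\b"
)

def toy_hipify(source: str) -> str:
    """Rename the CUDA identifiers listed in CUDA_TO_HIP to their HIP names."""
    return _PATTERN.sub(lambda m: CUDA_TO_HIP[m.group(1)], source)

if __name__ == "__main__":
    cuda_src = """#include <cuda_runtime.h>
float *d_x;
cudaMalloc(&d_x, n * sizeof(float));
cudaMemcpy(d_x, x, n * sizeof(float), cudaMemcpyHostToDevice);
cudaDeviceSynchronize();
cudaFree(d_x);
"""
    print(toy_hipify(cuda_src))
```

Names missing from the table, such as `cudaMallocManaged`, pass through this toy unchanged. That is why a practical converter needs a far more complete mapping than a few hand-picked entries.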

New to ROCm is MIOpen, a GPU-accelerated library that encompasses a broad array of deep learning functions. AMD plans to add support for Caffe, TensorFlow and Torch in the near future. Although everything here is open source, the breadth of support and functionality is a fraction of what is currently available to CUDA users. As a consequence, the chipmaker has its work cut out for it to capture deep learning customers.

AMD plans to ship the new Radeon Instinct cards in Q3 of this year.